Have you ever wondered how to simplify and understand complex mathematical structures like symmetric matrices?

Jenn, Founder Calcworkshop®, 15+ Years Experience (Licensed & Certified Teacher)

Diagonalization of symmetric matrices is like solving a Rubik’s Cube, where each twist and turn brings you closer to the neatly organized and aligned colors.

By breaking down the complexities of symmetric matrices into more manageable components, we can extract valuable insights and solve problems more efficiently.

So, let’s jump in and solve this puzzle one step at a time.

Introduction to Symmetric Matrices

Ready? First, let’s understand the basics.

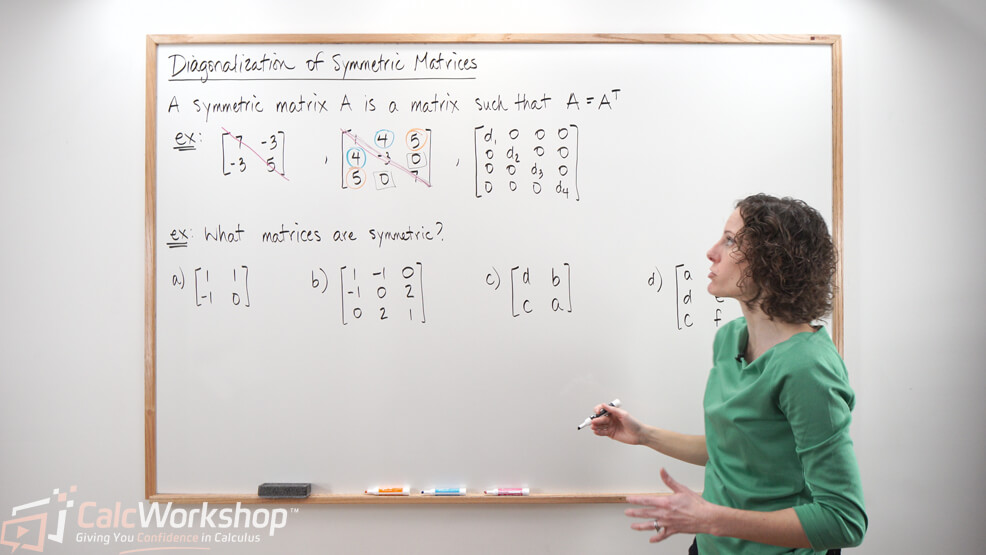

If a square matrix $A$ satisfies $A^T = A$, then $A$ is called a symmetric matrix.

This means that the main diagonal entries are arbitrary values, but its other entries occur in pairs on either side of the main diagonal.
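As a quick illustration of the definition, here is a minimal Python sketch that tests whether a matrix equals its own transpose. The helper names `transpose` and `is_symmetric`, and the sample matrices `S` and `N`, are made up for this example.

```python
def transpose(M):
    """Return the transpose of a matrix stored as a list of rows."""
    return [list(col) for col in zip(*M)]


def is_symmetric(M):
    """True when M is square and equal to its own transpose."""
    is_square = all(len(row) == len(M) for row in M)
    return is_square and M == transpose(M)


# Hypothetical sample matrices, chosen only for illustration.
S = [[1, 7, 3],
     [7, 4, -5],
     [3, -5, 6]]   # off-diagonal entries are mirrored across the diagonal

N = [[1, 2],
     [3, 4]]       # 2 and 3 are not mirrored, so N is not symmetric

print(is_symmetric(S))
print(is_symmetric(N))
```

Notice that the diagonal entries 1, 4, 6 of `S` play no role in the check; only the mirrored pairs matter.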

For example, the following

As you can see,

And if you’ve ever studied graph theory or taken discrete mathematics, you may recognize a familiar example: the adjacency matrix of an undirected graph is always symmetric.

Besides a symmetric matrix being “symmetric,” what’s so special about it?

Properties of Symmetric Matrices

Let’s look at a few properties related to symmetric matrices:

- The product of any matrix and its transpose is symmetric. That is, both $AA^T$ and $A^TA$ are symmetric.

- If

- The sum of two symmetric matrices is also symmetric.

- Eigenvectors of a symmetric matrix that correspond to distinct eigenvalues are orthogonal.
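Two of these properties are easy to check numerically. The sketch below, in plain Python, uses a hypothetical non-square matrix `B` and two hypothetical symmetric matrices `S1` and `S2`; none of them come from the lesson, and the helper functions are written just for this example.

```python
def transpose(M):
    """Return the transpose of a matrix stored as a list of rows."""
    return [list(col) for col in zip(*M)]


def matmul(X, Y):
    """Multiply two matrices stored as lists of rows."""
    Yt = transpose(Y)
    return [[sum(a * b for a, b in zip(row, col)) for col in Yt] for row in X]


def add(X, Y):
    """Add two matrices of the same shape entry by entry."""
    return [[a + b for a, b in zip(rx, ry)] for rx, ry in zip(X, Y)]


def is_symmetric(M):
    """True when M is square and equal to its own transpose."""
    return all(len(row) == len(M) for row in M) and M == transpose(M)


# B is 2x3, so it cannot be symmetric itself.
B = [[1, 2, 0],
     [3, -1, 4]]

BBt = matmul(B, transpose(B))   # 2x2 product
BtB = matmul(transpose(B), B)   # 3x3 product

# Two symmetric matrices of the same size.
S1 = [[2, 1], [1, 3]]
S2 = [[0, -4], [-4, 5]]

print(is_symmetric(BBt), is_symmetric(BtB))   # both products are symmetric
print(is_symmetric(add(S1, S2)))              # the sum is symmetric too
```

The products have different sizes (2×2 and 3×3), yet each one equals its own transpose.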

Diagonalization of Symmetric Matrices

And it’s this last property that gives us two compelling theorems regarding the diagonalization of symmetric matrices:

- If

- Additionally, an $n \times n$ matrix $A$ is orthogonally diagonalizable if and only if $A$ is symmetric.

Now, that’s pretty special! Because if we have a symmetric matrix, we can quickly determine that it’s diagonalizable.

Orthogonal Diagonalization Example

Let’s look at an example where we will orthogonally diagonalize a symmetric matrix and give an orthogonal matrix $P$ and a diagonal matrix $D$ such that $A = PDP^T$.

First, we will find our characteristic polynomial and solve the equation to find our eigenvalues.

Because we have three distinct eigenvalues, and matrix

If

Therefore, our eigenspace is

Now we have to ensure that each pair of eigenvectors is orthogonal, meaning that the dot product of each pair is zero.

Now that we have shown that our eigenvectors are mutually orthogonal, we must normalize each vector (divide it by its length) so that the columns of our P matrix are orthonormal.

Thus, our P matrix, which consists of normalized eigenvectors, and our D matrix, which is the diagonal matrix of the corresponding eigenvalues, are as follows:

And we can verify the accuracy of our answer by showing

But here’s something very interesting …Look at the two matrices

This is incredible because if matrix

Cool!
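The steps above (check the eigenpairs, check orthogonality, normalize, assemble P and D, then verify) can be sketched in plain Python. This is only an illustration: the matrix `A` and its hand-computed eigenpairs are assumptions chosen for the sketch and are not necessarily the ones used in this lesson, and the helper functions are written just for this example.

```python
import math


def dot(u, v):
    """Dot product of two vectors."""
    return sum(a * b for a, b in zip(u, v))


def transpose(M):
    """Return the transpose of a matrix stored as a list of rows."""
    return [list(col) for col in zip(*M)]


def matmul(X, Y):
    """Multiply two matrices stored as lists of rows."""
    Yt = transpose(Y)
    return [[dot(row, col) for col in Yt] for row in X]


def close(X, Y, tol=1e-9):
    """True when two matrices agree entry by entry up to a small tolerance."""
    return all(abs(a - b) < tol for rx, ry in zip(X, Y) for a, b in zip(rx, ry))


# Assumed symmetric matrix (illustrative only).
A = [[6, -2, -1],
     [-2, 6, -1],
     [-1, -1, 5]]

# Eigenvalue/eigenvector pairs worked out by hand for this A.
eigenpairs = [(8, [-1, 1, 0]),
              (6, [-1, -1, 2]),
              (3, [1, 1, 1])]

# Step 1: confirm A v = lambda v for every pair.
eigen_ok = all(
    [dot(row, v) for row in A] == [lam * x for x in v]
    for lam, v in eigenpairs
)

# Step 2: confirm each pair of eigenvectors has dot product zero.
vecs = [v for _, v in eigenpairs]
orthogonal_ok = all(
    dot(vecs[i], vecs[j]) == 0
    for i in range(3) for j in range(i + 1, 3)
)

# Step 3: normalize each eigenvector by dividing by its length.
unit_vecs = [[x / math.sqrt(dot(v, v)) for x in v] for v in vecs]

# Step 4: the unit eigenvectors become the columns of P; D holds the eigenvalues.
P = transpose(unit_vecs)
D = [[eigenpairs[i][0] if i == j else 0 for j in range(3)] for i in range(3)]

# Step 5: verify A = P D P^T and that P^T P is the identity.
identity = [[1 if i == j else 0 for j in range(3)] for i in range(3)]
reconstruction_ok = close(matmul(matmul(P, D), transpose(P)), A)
orthogonal_matrix_ok = close(matmul(transpose(P), P), identity)

print(eigen_ok, orthogonal_ok, reconstruction_ok, orthogonal_matrix_ok)
```

The last check is the practical payoff of an orthogonal P: its inverse is simply its transpose, so no separate inverse computation is needed when verifying the result.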

Next Steps

Together, we will:

- Learn to identify symmetric matrices and determine how to use them for identifying conic sections with cross-product terms, where two variables are multiplied together, such as “xy.”

- Discover that to identify the conic section without looking at the graph, you need to analyze the eigenvalues and eigenvectors of the symmetric matrix of its quadratic form in order to rotate the conic to a new set of axes – an orthogonal set!

- Explore several theorems and definitions, and review the Gram-Schmidt process to understand the steps needed to diagonalize a symmetric matrix.

- Delve into the Spectral Theorem for Symmetric Matrices and Spectral Decomposition.
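To preview the conic-section idea, here is a minimal Python sketch. It assumes an equation of the form a·x² + b·xy + c·y² = k with k > 0, builds the symmetric matrix [[a, b/2], [b/2, c]], and classifies the curve by the signs of that matrix's eigenvalues. The function name `classify_conic` and the sample equations are hypothetical, chosen only for illustration.

```python
import math


def classify_conic(a, b, c, tol=1e-12):
    """Classify a*x^2 + b*x*y + c*y^2 = k, assuming k > 0.

    Uses the closed-form eigenvalues of the 2x2 symmetric matrix
    [[a, b/2], [b/2, c]]. Returns (label, smaller eigenvalue, larger eigenvalue).
    """
    mean = (a + c) / 2
    radius = math.hypot((a - c) / 2, b / 2)
    lam1, lam2 = mean - radius, mean + radius

    if abs(lam1) < tol or abs(lam2) < tol:
        label = "degenerate"        # a zero eigenvalue: not an ellipse or hyperbola
    elif lam1 > 0:
        label = "ellipse"           # both eigenvalues positive
    elif lam2 < 0:
        label = "no real points"    # both negative, but k > 0
    else:
        label = "hyperbola"         # eigenvalues of opposite sign
    return label, lam1, lam2


# 5x^2 - 4xy + 5y^2 = 48
print(classify_conic(5, -4, 5))

# x^2 - 8xy - 5y^2 = 16
print(classify_conic(1, -8, -5))
```

In the rotated coordinates given by the orthonormal eigenvectors, the xy term disappears and the equation becomes λ₁·u² + λ₂·v² = k, which is why the signs of the eigenvalues alone decide the shape.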

Let’s dive in and get started!
