What if the linear matrix equation $A\mathbf{x} = \mathbf{b}$ has no solution?

Jenn, Founder Calcworkshop®, 15+ Years Experience (Licensed & Certified Teacher)

Well, we would need to find the best approximate solution. And to do this, we will use the method of least squares.

Don’t worry. You have seen this idea before.

Remember back in Algebra how we created lines of “best fit”? Well, best-fit lines, sometimes called linear regression lines or trend lines, are just like least-squares solutions, as they are a best approximate solution for an inconsistent matrix equation $A\mathbf{x} = \mathbf{b}$.

Least Squares Definition and Normal Equations

So, our goal is to estimate a solution for a system that cannot be solved algebraically.

How?

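Well, knowing that we can’t solve the equation $A\mathbf{x} = \mathbf{b}$ exactly, we look for an $\mathbf{x}$ that makes $A\mathbf{x}$ as close as possible to $\mathbf{b}$.

So, the smaller the distance between $\mathbf{b}$ and $A\mathbf{x}$, the better the approximation.

Recall that the distance between two vectors, denoted $\|\mathbf{u} - \mathbf{v}\|$, is the length of their difference: the square root of the sum of the squared differences of their entries. That sum of squares is where the name “least squares” comes from.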

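- Okay, so our definition states that if we let $A$ be an $m \times n$ matrix and $\mathbf{b}$ be a vector in $\mathbb{R}^m$, then a least-squares solution of $A\mathbf{x} = \mathbf{b}$ is a vector $\hat{\mathbf{x}}$ in $\mathbb{R}^n$ such that $\|\mathbf{b} - A\hat{\mathbf{x}}\| \le \|\mathbf{b} - A\mathbf{x}\|$ for all $\mathbf{x}$ in $\mathbb{R}^n$.

- Additionally, the set of least-squares solutions of $A\mathbf{x} = \mathbf{b}$ coincides with the nonempty set of solutions of the normal equations $A^T A\mathbf{x} = A^T\mathbf{b}$.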
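As a brief sketch of where the normal equations come from (a standard argument, not spelled out in this lesson): the point of the column space of $A$ closest to $\mathbf{b}$ is the orthogonal projection $\hat{\mathbf{b}} = A\hat{\mathbf{x}}$, so the residual $\mathbf{b} - A\hat{\mathbf{x}}$ must be orthogonal to every column $\mathbf{a}_j$ of $A$. The symbols $\hat{\mathbf{b}}$ and $\mathbf{a}_j$ are notation assumed here for illustration.

$$
\begin{aligned}
\mathbf{a}_j^T(\mathbf{b} - A\hat{\mathbf{x}}) &= 0 \quad \text{for every column } \mathbf{a}_j \text{ of } A \\
A^T(\mathbf{b} - A\hat{\mathbf{x}}) &= \mathbf{0} \\
A^T A\hat{\mathbf{x}} &= A^T\mathbf{b}
\end{aligned}
$$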

Methods for Finding Least Squares Solutions

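In other words, as long as matrix $A$ has linearly independent columns, so that $A^T A$ is invertible, the least-squares solution $\hat{\mathbf{x}}$ is unique and can be found in three ways:

- $A^T A\hat{\mathbf{x}} = A^T\mathbf{b}$, using row reduction to find a consistent matrix equation

- $\hat{\mathbf{x}} = (A^T A)^{-1}A^T\mathbf{b}$, using the inverse of $A^T A$

- $\hat{\mathbf{x}} = R^{-1}Q^T\mathbf{b}$, from the QR factorization $A = QR$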
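To see how the QR approach connects to the normal equations, here is a short derivation. It assumes $A = QR$, where $Q$ has orthonormal columns (so $Q^T Q = I$) and $R$ is an invertible upper triangular matrix; these are the usual conditions for a QR factorization of a matrix with linearly independent columns.

$$
\begin{aligned}
A^T A\hat{\mathbf{x}} &= A^T\mathbf{b} \\
R^T Q^T Q R\,\hat{\mathbf{x}} &= R^T Q^T\mathbf{b} \\
R^T R\,\hat{\mathbf{x}} &= R^T Q^T\mathbf{b} \\
R\,\hat{\mathbf{x}} &= Q^T\mathbf{b} \\
\hat{\mathbf{x}} &= R^{-1}Q^T\mathbf{b}
\end{aligned}
$$

In practice, the triangular system $R\hat{\mathbf{x}} = Q^T\mathbf{b}$ is usually solved by back-substitution, so $R^{-1}$ never has to be computed explicitly.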

Example: Row Reduction Method

Alright, let’s work through an example together.

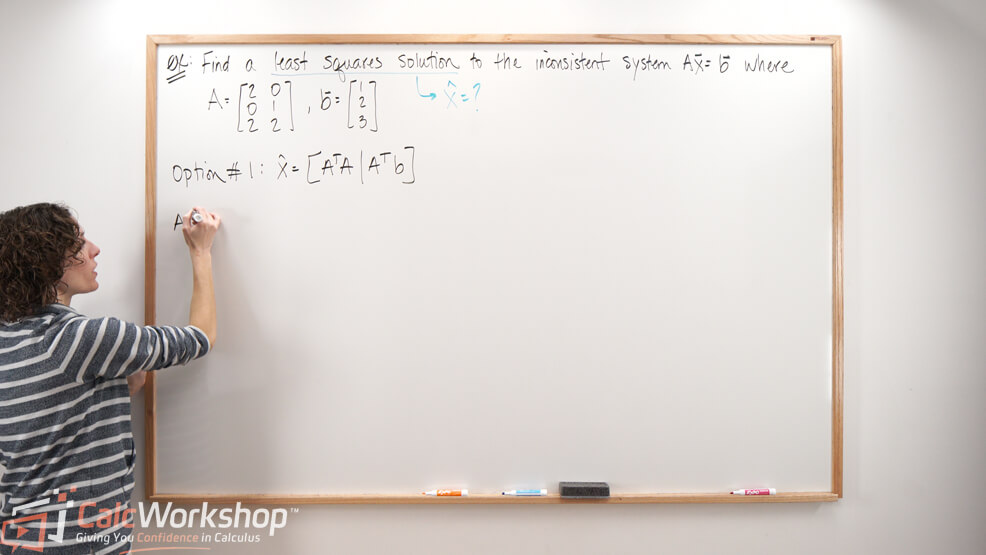

Find the least-squares solution of the inconsistent system

First, we will calculate the matrix products $A^T A$ and $A^T\mathbf{b}$.

Next, we will create the augmented matrix $[\,A^T A \mid A^T\mathbf{b}\,]$ and use row echelon techniques to find a consistent system.

Consequently, the general least-squares solution and the best approximation to the system is.

I have found that using row reduction is the quickest way to find the least squares solution, but as we will see in our video, using inverses and QR factorization are also excellent choices when the problem allows for it.
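The row-reduction steps can also be sketched in Python. This is a minimal illustration, not the lesson's own worked example: the matrix `A` and vector `b` below are made-up values, the helper names are hypothetical, and the solver assumes $A^T A$ is invertible. It uses `fractions.Fraction` so the arithmetic stays exact.

```python
from fractions import Fraction


def transpose(M):
    """Return the transpose of a matrix stored as a list of rows."""
    return [list(col) for col in zip(*M)]


def matmul(X, Y):
    """Multiply two matrices stored as lists of rows."""
    Yt = transpose(Y)
    return [[sum(x * y for x, y in zip(row, col)) for col in Yt] for row in X]


def solve_by_row_reduction(M, rhs):
    """Gauss-Jordan elimination on [M | rhs]; assumes M is square and invertible."""
    n = len(M)
    aug = [[Fraction(v) for v in row] + [Fraction(r)] for row, r in zip(M, rhs)]
    for col in range(n):
        # Find a row with a nonzero entry in this column and swap it into place.
        pivot = next(r for r in range(col, n) if aug[r][col] != 0)
        aug[col], aug[pivot] = aug[pivot], aug[col]
        # Scale the pivot row so the pivot equals 1.
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        # Clear the pivot column in every other row.
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [a - factor * c for a, c in zip(aug[r], aug[col])]
    return [row[-1] for row in aug]


def least_squares(A, b):
    """Solve the normal equations (A^T A) x = A^T b by row reduction."""
    At = transpose(A)
    AtA = matmul(At, A)
    Atb = [sum(a * bi for a, bi in zip(row, b)) for row in At]
    return solve_by_row_reduction(AtA, Atb)


# Illustrative data (an assumption, not taken from the lesson's example).
A = [[4, 0], [0, 2], [1, 1]]
b = [2, 0, 11]

x_hat = least_squares(A, b)
print([str(v) for v in x_hat])  # ['1', '2']

# Squared least-squares error ||b - A x_hat||^2 for this data.
residual = [bi - sum(a * x for a, x in zip(row, x_hat)) for row, bi in zip(A, b)]
squared_error = sum(r * r for r in residual)
print(squared_error)  # 84
```

For this data, $A^T A = \begin{bmatrix} 17 & 1 \\ 1 & 5 \end{bmatrix}$ and $A^T\mathbf{b} = (19, 11)$, and the error of the approximation is $\sqrt{84}$.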

Orthogonal Projections Method

In fact, there is a fourth method for finding the least-squares solution when we are given an orthogonal basis, that is, when the columns of $A$ are orthogonal. This process involves orthogonal projection of $\mathbf{b}$ onto the column space of $A$.

Example: Orthogonal Projections Method

For example, let’s find the least-squares solution of

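First, we notice that the columns of $A$ are orthogonal.

Therefore, we can find the orthogonal projection of $\mathbf{b}$ onto the column space of $A$.

If we recall from our previous lessons, the formula we need is $\hat{\mathbf{b}} = \frac{\mathbf{b}\cdot\mathbf{a}_1}{\mathbf{a}_1\cdot\mathbf{a}_1}\mathbf{a}_1 + \cdots + \frac{\mathbf{b}\cdot\mathbf{a}_n}{\mathbf{a}_n\cdot\mathbf{a}_n}\mathbf{a}_n$, where $\mathbf{a}_1, \dots, \mathbf{a}_n$ are the orthogonal columns of $A$.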

So, let’s find the orthogonal projection by computing our dot products.

Now let’s substitute our values into our projection formula.

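And finally, now that $\hat{\mathbf{b}}$ is known, we can solve $A\hat{\mathbf{x}} = \hat{\mathbf{b}}$.

Because if we let $\mathbf{a}_1, \dots, \mathbf{a}_n$ be the columns of $A$, then the weights placed on those columns in the projection formula are exactly the entries of $\hat{\mathbf{x}}$.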

So, the general solution to our least-squares problem is

Not bad, right?
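The projection method can be sketched in a few lines of Python. Treat this as an illustration under stated assumptions: the columns `a1`, `a2` and the vector `b` are made-up values rather than the data from the example above, and the function name is hypothetical. The function checks that the columns really are orthogonal before using the projection weights as the entries of the least-squares solution.

```python
from fractions import Fraction


def dot(u, v):
    """Exact dot product of two vectors."""
    return sum(Fraction(a) * Fraction(c) for a, c in zip(u, v))


def least_squares_orthogonal(columns, b):
    """Least-squares solution when the columns of A are mutually orthogonal.

    Returns (x_hat, b_hat): the projection weights and the projection of b
    onto the span of the columns.
    """
    # The shortcut is only valid for orthogonal columns, so verify that first.
    for i in range(len(columns)):
        for j in range(i + 1, len(columns)):
            if dot(columns[i], columns[j]) != 0:
                raise ValueError("columns are not orthogonal")
    # Each weight is (b . a_i) / (a_i . a_i).
    weights = [dot(b, a) / dot(a, a) for a in columns]
    # b_hat is the weighted sum of the columns.
    b_hat = [sum(w * a[k] for w, a in zip(weights, columns)) for k in range(len(b))]
    return weights, b_hat


# Illustrative data (an assumption, not taken from the lesson's example).
a1 = [1, 1, 1, 1]
a2 = [-6, -2, 1, 7]
b = [-1, 2, 1, 6]

x_hat, b_hat = least_squares_orthogonal([a1, a2], b)
print([str(w) for w in x_hat])  # ['2', '1/2']
print([str(v) for v in b_hat])  # ['-1', '1', '5/2', '11/2']
```

Notice that no system of equations has to be solved here: once the dot products are known, the weights are the solution.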

Next Steps

Together, we will work through numerous examples to find the least-squares solution. We’ll use various methods, such as:

- Row reduction

- Inverses

- QR factorization

- Orthogonal projections

Additionally, we’ll find the error for our approximation.

It’s going to be fun, so let’s get to it!
