Have you noticed that cities are built in grids?

Jenn, Founder Calcworkshop®, 15+ Years Experience (Licensed & Certified Teacher)

And how cities have developed a “block” principle, which indicates the space between one cross street and the next? These city blocks subdivide a city into smaller rectangular areas with varying sizes and help with orientation, direction, and even cultural and social promotion.

Well, here’s an interesting tidbit: a matrix can be partitioned in a similar manner as a city block.

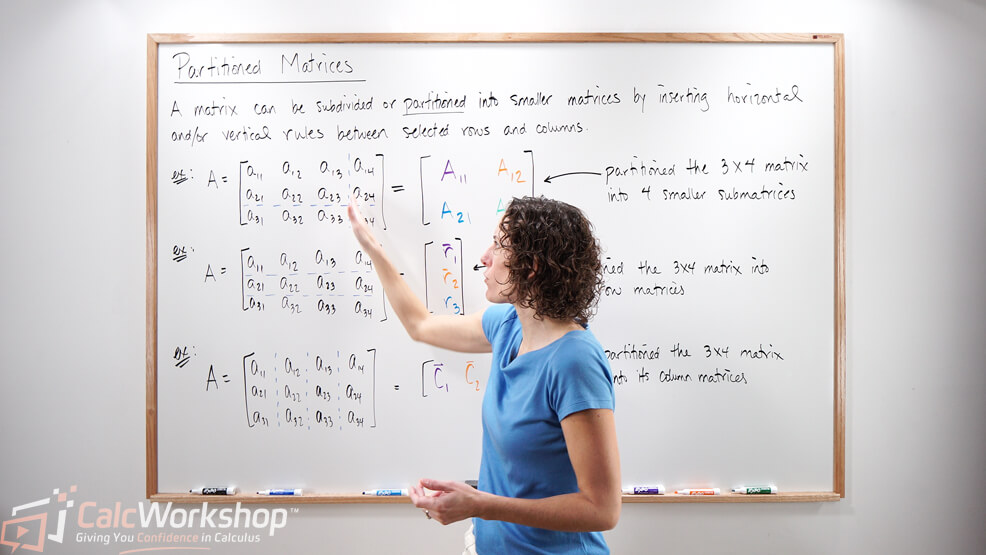

What is a Partitioned Matrix?

In fact, a partitioned matrix is a division of a matrix into smaller rectangular matrices called submatrices or blocks. This is why a partitioned matrix is sometimes called a block matrix.

But why is partitioning a matrix necessary? Matrices aren’t cities, so what’s the point?

Why Partition a Matrix?

Partitioned matrices often appear in modern applications of linear algebra because the notation simplifies many discussions and highlights essential structure in matrix calculations, especially when dealing with matrices of great size.

Consequently, subdividing, or blocking, a matrix with horizontal and vertical rules produces smaller, compatible matrices that are easier to work with.

Example of Matrix Partitioning

A matrix can be partitioned in various ways. For example, suppose we are given the following matrix.

\begin{align*}

A=\left[\begin{array}{cccccc}

1 & -3 & 0 & 4 & 2 & -5 \\

-2 & 9 & 5 & -1 & 0 & 3 \\

-4 & 7 & 1 & 3 & -6 & -8

\end{array}\right]

\end{align*}

We can partition the matrix into submatrices or blocks as follows.

\begin{align*}

A=\left[\begin{array}{ccc|cc|c}

1 & -3 & 0 & 4 & 2 & -5 \\

-2 & 9 & 5 & -1 & 0 & 3 \\

\hline-4 & 7 & 1 & 3 & -6 & -8

\end{array}\right]

\end{align*}

This gives \(A=\left[\begin{array}{lll}A_{11} & A_{12} & A_{13} \\ A_{21} & A_{22} & A_{23}\end{array}\right]\), whose entries are the “blocks”

\begin{aligned}

A_{11} & =\left[\begin{array}{ccc}

1 & -3 & 0 \\

-2 & 9 & 5

\end{array}\right], \\

A_{12} & =\left[\begin{array}{cc}

4 & 2 \\

-1 & 0

\end{array}\right], \\

A_{13} & =\left[\begin{array}{c}

-5 \\

3

\end{array}\right], \\

A_{21} & =\left[\begin{array}{lll}

-4 & 7 & 1

\end{array}\right], \\

A_{22} & =\left[\begin{array}{ll}

3 & -6

\end{array}\right], \\

A_{23} & =[-8]

\end{aligned}
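To make the idea concrete, here is a minimal sketch of the same partition in plain Python lists. The helper name `partition` and its `row_cuts` / `col_cuts` parameters are illustrative choices, not part of any library; they simply record where the horizontal and vertical rules are drawn.

```python
# Partition A with a horizontal rule after row 2 and vertical rules
# after columns 3 and 5, matching the example above.
A = [
    [1, -3, 0, 4, 2, -5],
    [-2, 9, 5, -1, 0, 3],
    [-4, 7, 1, 3, -6, -8],
]


def partition(matrix, row_cuts, col_cuts):
    """Split `matrix` into a grid of blocks (hypothetical helper).

    `row_cuts` / `col_cuts` list the positions of the horizontal and
    vertical rules, counted as "after this many rows/columns".
    """
    row_edges = [0, *row_cuts, len(matrix)]
    col_edges = [0, *col_cuts, len(matrix[0])]
    return [
        [
            [row[c0:c1] for row in matrix[r0:r1]]
            for c0, c1 in zip(col_edges, col_edges[1:])
        ]
        for r0, r1 in zip(row_edges, row_edges[1:])
    ]


blocks = partition(A, row_cuts=[2], col_cuts=[3, 5])
A11, A12, A13 = blocks[0]  # first block row
A21, A22, A23 = blocks[1]  # second block row

print(A11)  # [[1, -3, 0], [-2, 9, 5]]
print(A23)  # [[-8]]
```

Changing `row_cuts` and `col_cuts` gives a different partition of the same matrix without touching its entries.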

Various Ways to Partition a Matrix

But this is not the only option. The choice of blocks is determined by what the analysis of the linear system and data calls for, so we could just as easily have partitioned the same matrix \(\mathrm{A}\) as follows.

\begin{align*}

A=\left[\begin{array}{cc|cccc}

1 & -3 & 0 & 4 & 2 & -5 \\

\hline-2 & 9 & 5 & -1 & 0 & 3 \\

-4 & 7 & 1 & 3 & -6 & -8

\end{array}\right]

\end{align*}

What is important with partitioned matrices is that we have taken a “large” matrix and made it “smaller,” or more compact, using partitions. Instead of viewing the matrix as an array whose entries are numbers, we view it as a smaller array in which each entry is itself a matrix.

Notation for Partitioned Matrices

Pay close attention to the notation, as it can be a bit confusing. When partitioning a matrix, much like when we partition a set, we use capital letters representing each block or submatrix and subscripts indicating its location.

For example, let’s name each block and state its dimensions for the matrix

\begin{align*}

A=\left[\begin{array}{cc|c}

2 & 1 & 0 \\

-3 & 5 & -4 \\

1 & -2 & -2 \\

\hline 0 & 3 & 7 \\

8 & 6 & -9

\end{array}\right]

\end{align*}

First, we notice that matrix \(\mathrm{A}\) is divided into 4 blocks, such that \(A=\left[\begin{array}{cc}A_{11} & A_{12} \\ A_{21} & A_{22}\end{array}\right]\) where the first number in the subscript indicates the row and the second number identifies the column.

Now we can name each submatrix and indicate its dimension:

\begin{aligned}

A_{11} & =\left[\begin{array}{cc}

2 & 1 \\

-3 & 5 \\

1 & -2

\end{array}\right], 3 \times 2 \\

A_{12} & =\left[\begin{array}{c}

0 \\

-4 \\

-2

\end{array}\right], 3 \times 1 \\

A_{21} & =\left[\begin{array}{cc}

0 & 3 \\

8 & 6

\end{array}\right], 2 \times 2 \\

A_{22} & =\left[\begin{array}{c}

7 \\

-9

\end{array}\right], 2 \times 1

\end{aligned}
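The same bookkeeping can be checked with a few lines of Python. This is only a sketch using list slicing; the variable names `row_cut` and `col_cut` are assumptions made for the illustration.

```python
A = [
    [2, 1, 0],
    [-3, 5, -4],
    [1, -2, -2],
    [0, 3, 7],
    [8, 6, -9],
]
row_cut, col_cut = 3, 2  # rule after row 3, rule after column 2

# The first subscript is the block row, the second is the block column.
blocks = {
    "A11": [row[:col_cut] for row in A[:row_cut]],
    "A12": [row[col_cut:] for row in A[:row_cut]],
    "A21": [row[:col_cut] for row in A[row_cut:]],
    "A22": [row[col_cut:] for row in A[row_cut:]],
}

# Dimension of each block as (rows, columns).
shapes = {name: (len(b), len(b[0])) for name, b in blocks.items()}
for name, (rows, cols) in shapes.items():
    print(f"{name}: {rows} x {cols}")
```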

Operations with Partitioned Matrices

Alright, so now it’s time to talk about what we can do with partitioned matrices.

If two matrices are the same size and are partitioned in precisely the same way, then we can add, subtract, and scale them block by block, just as we would with ordinary matrix sums and differences, except that each block of \(A+B\) is itself a matrix sum. For example, if \(A=\left[\begin{array}{ll}A_{11} & A_{12} \\ A_{21} & A_{22}\end{array}\right]\) and \(B=\left[\begin{array}{ll}B_{11} & B_{12} \\ B_{21} & B_{22}\end{array}\right]\) are partitioned in the same way, then for any scalars \(c\) and \(d\)

\begin{aligned}

A+B & =\left[\begin{array}{ll}

A_{11}+B_{11} & A_{12}+B_{12} \\

A_{21}+B_{21} & A_{22}+B_{22}

\end{array}\right] \\

A-B & =\left[\begin{array}{ll}

A_{11}-B_{11} & A_{12}-B_{12} \\

A_{21}-B_{21} & A_{22}-B_{22}

\end{array}\right] \\

c A+d B & =\left[\begin{array}{ll}

c A_{11}+d B_{11} & c A_{12}+d B_{12} \\

c A_{21}+d B_{21} & c A_{22}+d B_{22}

\end{array}\right]

\end{aligned}
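As a quick sanity check of the block formulas above, the sketch below forms \(cA+dB\) block by block and compares it with the same combination computed on the full matrices. The numbers and the helper names `lin_comb` and `assemble` are made up for the illustration.

```python
def lin_comb(c, X, d, Y):
    """Entrywise c*X + d*Y for two matrices of the same size."""
    return [[c * x + d * y for x, y in zip(rx, ry)] for rx, ry in zip(X, Y)]


def assemble(blocks):
    """Glue a grid of blocks back into one ordinary matrix."""
    rows = []
    for block_row in blocks:
        for i in range(len(block_row[0])):
            rows.append([x for block in block_row for x in block[i]])
    return rows


# Two 3 x 3 matrices partitioned the same way (rows 1|2, columns 2|1).
A_blocks = [[[[1, 2]], [[3]]],
            [[[4, 5], [6, 7]], [[8], [9]]]]
B_blocks = [[[[0, 1]], [[1]]],
            [[[2, 0], [1, 1]], [[3], [0]]]]
c, d = 2, -1

# Combine matching blocks, then reassemble.
blockwise = assemble([
    [lin_comb(c, Ab, d, Bb) for Ab, Bb in zip(A_row, B_row)]
    for A_row, B_row in zip(A_blocks, B_blocks)
])

# Combine the full matrices directly.
direct = lin_comb(c, assemble(A_blocks), d, assemble(B_blocks))

print(blockwise == direct)  # True
```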

Multiplying Partitioned Matrices

But how do we multiply partitioned matrices?

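Well, block multiplication works when the column partition of A matches the row partition of B, so that every block product \(A_{ik} B_{kj}\) has matching inner dimensions, \((m \times n)(n \times p)=m \times p\). In that case, we say the partitions of A and B are conformable for block multiplication, and we multiply using the usual row-column rule with blocks in place of numbers, keeping the factors of each product in order.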

\begin{aligned}

\text{If }

A & =\left[\begin{array}{ll}

A_{11} & A_{12} \\

A_{21} & A_{22} \\

A_{31} & A_{32}

\end{array}\right] \text{ and } \\

B & =\left[\begin{array}{ll}

B_{11} & B_{12} \\

B_{21} & B_{22}

\end{array}\right] \text{, then } \\

A B & =\underbrace{\left[\begin{array}{ll}

A_{11} & A_{12} \\

A_{21} & A_{22} \\

A_{31} & A_{32}

\end{array}\right]}_{3 \times 2} \underbrace{\left[\begin{array}{ll}

B_{11} & B_{12} \\

B_{21} & B_{22}

\end{array}\right]}_{2 \times 2} \\

& =\underbrace{\left[\begin{array}{ll}

A_{11} B_{11}+A_{12} B_{21} & A_{11} B_{12}+A_{12} B_{22} \\

A_{21} B_{11}+A_{22} B_{21} & A_{21} B_{12}+A_{22} B_{22} \\

A_{31} B_{11}+A_{32} B_{21} & A_{31} B_{12}+A_{32} B_{22}

\end{array}\right]}_{3 \times 2}

\end{aligned}
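Here is a small sketch of that rule in Python, under the assumption that the blocks are stored as nested lists. It multiplies a matrix with a 3-by-2 grid of blocks by one with a 2-by-2 grid of blocks and compares the result with ordinary multiplication. The helper names (`matmul`, `matadd`, `assemble`, `block_matmul`) and the numbers are illustrative only.

```python
def matmul(X, Y):
    """Ordinary row-by-column matrix product."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)]
            for row in X]


def matadd(X, Y):
    """Entrywise matrix sum."""
    return [[x + y for x, y in zip(rx, ry)] for rx, ry in zip(X, Y)]


def assemble(blocks):
    """Glue a grid of blocks back into one ordinary matrix."""
    rows = []
    for block_row in blocks:
        for i in range(len(block_row[0])):
            rows.append([x for block in block_row for x in block[i]])
    return rows


def block_matmul(A_blocks, B_blocks):
    """Block (i, j) of the product is the sum over k of A_ik * B_kj."""
    result = []
    for A_row in A_blocks:
        out_row = []
        for j in range(len(B_blocks[0])):
            total = matmul(A_row[0], B_blocks[0][j])
            for k in range(1, len(B_blocks)):
                total = matadd(total, matmul(A_row[k], B_blocks[k][j]))
            out_row.append(total)
        result.append(out_row)
    return result


# A is 3 x 4 with columns split 2|2; B is 4 x 3 with rows split 2|2,
# so the partitions are conformable.
A_blocks = [[[[1, 2]], [[0, 1]]],
            [[[0, 1]], [[3, -1]]],
            [[[2, 0]], [[1, 1]]]]
B_blocks = [[[[1, 0], [0, 1]], [[2], [1]]],
            [[[3, 1], [1, 2]], [[0], [1]]]]

blockwise = assemble(block_matmul(A_blocks, B_blocks))
direct = matmul(assemble(A_blocks), assemble(B_blocks))
print(blockwise == direct)  # True
```

Note that the order inside each product matters: the code always computes `A_ik` times `B_kj`, never the reverse, because matrix multiplication is not commutative.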

We’ll work through examples for performing operations such as block multiplication in the video lesson, as it is easier than you can imagine. And we will discuss the Column-Row Expansion Theorem and how we can multiply two matrices by comparing column to row rather than row to column, as is customary.
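For a preview of the column-row idea, the standard statement is that \(AB\) equals the sum, over \(k\), of column \(k\) of A times row \(k\) of B. The sketch below checks this on a small example; the matrices and the helper names `outer` and `matmul` are assumptions made for the illustration, not taken from the lesson.

```python
A = [[1, 2], [3, 4], [5, 6]]   # 3 x 2
B = [[1, 0, 2], [-1, 3, 1]]    # 2 x 3


def outer(col, row):
    """Product of a column (n x 1) and a row (1 x p), giving n x p."""
    return [[c * r for r in row] for c in col]


def matmul(X, Y):
    """Ordinary row-by-column matrix product."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)]
            for row in X]


# Start from the zero matrix and add one column-times-row term per k.
expansion = [[0] * len(B[0]) for _ in range(len(A))]
for k in range(len(B)):
    col_k = [row[k] for row in A]   # column k of A
    term = outer(col_k, B[k])       # times row k of B
    expansion = [[e + t for e, t in zip(er, tr)]
                 for er, tr in zip(expansion, term)]

print(expansion == matmul(A, B))  # True
```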

Partitioned Matrices and Identity or Zero Matrix

But partitioned matrices are especially useful when some of the blocks turn out to be identity or zero matrices.

For example, suppose matrix A is as shown below.

\begin{align*}

A=\left[\begin{array}{llllll}

1 & 0 & 0 & 0 & 0 & 1 \\

0 & 1 & 0 & 0 & 0 & 1 \\

0 & 0 & 1 & 0 & 0 & 1 \\

0 & 0 & 0 & 1 & 0 & 0 \\

0 & 0 & 0 & 0 & 1 & 0 \\

1 & 1 & 1 & 0 & 0 & 1

\end{array}\right]

\end{align*}

Just looking at this matrix can make your eyes glaze over. But if we partition it just right, we start to realize that it’s really not as bad as we first imagined. Look below:

\begin{aligned}

A &= \left[\begin{array}{ccc|cc|c}

1 & 0 & 0 & 0 & 0 & 1 \\

0 & 1 & 0 & 0 & 0 & 1 \\

0 & 0 & 1 & 0 & 0 & 1 \\

\hline 0 & 0 & 0 & 1 & 0 & 0 \\

0 & 0 & 0 & 0 & 1 & 0 \\

\hline 1 & 1 & 1 & 0 & 0 & 1

\end{array}\right] \\

& = \left[\begin{array}{ccc}

I_{3 \times 3} & 0_{3 \times 2} & \mathbf{a}_{3 \times 1} \\

0_{2 \times 3} & I_{2 \times 2} & 0_{2 \times 1} \\

\mathbf{a}_{1 \times 3}^{T} & 0_{1 \times 2} & 1

\end{array}\right] \\

& \text{where } \mathbf{a} = \left[\begin{array}{c}

1 \\

1 \\

1

\end{array}\right]

\end{aligned}
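Spotting identity and zero blocks can also be automated. The following sketch slices the 6-by-6 matrix at the same rules and labels each block; the function names `block`, `is_zero`, `is_identity`, and `label` are hypothetical helpers written for this example.

```python
A = [
    [1, 0, 0, 0, 0, 1],
    [0, 1, 0, 0, 0, 1],
    [0, 0, 1, 0, 0, 1],
    [0, 0, 0, 1, 0, 0],
    [0, 0, 0, 0, 1, 0],
    [1, 1, 1, 0, 0, 1],
]
edges = [0, 3, 5, 6]  # rules after row/column 3 and after row/column 5


def block(i, j):
    """Return block (i, j) of A, using 0-based block indices."""
    return [row[edges[j]:edges[j + 1]] for row in A[edges[i]:edges[i + 1]]]


def is_zero(m):
    return all(x == 0 for row in m for x in row)


def is_identity(m):
    return len(m) == len(m[0]) and all(
        x == (1 if i == j else 0)
        for i, row in enumerate(m)
        for j, x in enumerate(row)
    )


def label(m):
    if is_identity(m):
        return "I"
    if is_zero(m):
        return "0"
    return "other"


labels = [[label(block(i, j)) for j in range(3)] for i in range(3)]
for row in labels:
    print(row)
# ['I', '0', 'other']
# ['0', 'I', '0']
# ['other', '0', 'I']   (the 1 x 1 block [1] counts as an identity)
```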

Performing Operations with Block Matrices

Now, this partitioning is immensely helpful because we can compute products and powers block by block, with identity and zero blocks behaving much like the numbers 1 and 0.

For example, suppose the matrices below are partitioned for block multiplication.

\begin{aligned}

& \left[\begin{array}{ll}

I & 0 \\

A & I

\end{array}\right]\left[\begin{array}{cc}

A & B \\

-C & 0

\end{array}\right]

\end{aligned}

We compute the product as follows.

\begin{aligned}

\left[\begin{array}{cc}

I & 0 \\

A & I

\end{array}\right]\left[\begin{array}{cc}

A & B \\

-C & 0

\end{array}\right] & = \left[\begin{array}{cc}

I(A)+0(-C) & I(B)+0(0) \\

A(A)+I(-C) & A(B)+I(0)

\end{array}\right] \\

& = \left[\begin{array}{cc}

A & B \\

A^{2}-C & A B

\end{array}\right]

\end{aligned}

Easy, right?
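If you would like to double-check the symbolic result, here is a minimal numeric sketch. It assumes all blocks are 2-by-2 and picks arbitrary values for A, B, and C; the helper names are illustrative.

```python
def matmul(X, Y):
    """Ordinary row-by-column matrix product."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)]
            for row in X]


def assemble(blocks):
    """Glue a grid of blocks back into one ordinary matrix."""
    rows = []
    for block_row in blocks:
        for i in range(len(block_row[0])):
            rows.append([x for block in block_row for x in block[i]])
    return rows


# Arbitrary 2 x 2 blocks chosen only for this check.
A = [[1, 2], [0, 1]]
B = [[0, 1], [1, 0]]
C = [[1, 1], [1, 1]]
I = [[1, 0], [0, 1]]
Z = [[0, 0], [0, 0]]
neg_C = [[-x for x in row] for row in C]

left = assemble([[I, Z], [A, I]])
right = assemble([[A, B], [neg_C, Z]])

# Expected blocks from the formula: [[A, B], [A^2 - C, AB]].
A2_minus_C = [[s - c for s, c in zip(rs, rc)]
              for rs, rc in zip(matmul(A, A), C)]
expected = assemble([[A, B], [A2_minus_C, matmul(A, B)]])

print(matmul(left, right) == expected)  # True
```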

And multiplying matrices that are partitioned conformably for block multiplication will also help us solve for, or find formulas for, specific blocks in terms of others, as we will see in the video.

Block Diagonal Matrices and Inverses of Partitioned Matrices

Lastly, by reviewing the Invertible Matrix Theorem, let’s discuss Block Diagonal Matrices and Inverses of Partitioned Matrices. A partitioned matrix A is “block diagonal” if the blocks on the main diagonal are square and every off-diagonal block is a zero matrix.

\begin{aligned}

A = \left[\begin{array}{cccc}

D_{1} & 0 & \cdots & 0 \\

0 & D_{2} & \cdots & 0 \\

\vdots & \vdots & \ddots & \vdots \\

0 & 0 & \cdots & D_{k}

\end{array}\right]

\end{aligned}

And since all the matrices \(D_{1}, D_{2}, \ldots, D_{k}\) are square, matrix \(\mathrm{A}\) is invertible if and only if each matrix on the diagonal is invertible, in which case

\begin{aligned}

A^{-1} &=\left[\begin{array}{cccc}

D_{1}^{-1} & 0 & \cdots & 0 \\

0 & D_{2}^{-1} & \cdots & 0 \\

\vdots & \vdots & \ddots & \vdots \\

0 & 0 & \cdots & D_{k}^{-1}

\end{array}\right]

\end{aligned}

Okay, let’s look at an example to help us make more sense of this.

Find the inverse of \(\mathrm{A}\) if \(\mathrm{A}\) is the block diagonal matrix

\begin{aligned}

A &=\left[\begin{array}{cc|cc|c}

1 & -1 & 0 & 0 & 0 \\

1 & 2 & 0 & 0 & 0 \\

\hline 0 & 0 & 5 & 4 & 0 \\

0 & 0 & 1 & 1 & 0 \\

\hline 0 & 0 & 0 & 0 & 2

\end{array}\right]

\end{aligned}

All we have to do is find the inverse of each block along the main diagonal, as the other blocks are 0.

\begin{aligned}

A &=\left[\begin{array}{cc|cc|c}

1 & -1 & 0 & 0 & 0 \\

1 & 2 & 0 & 0 & 0 \\

\hline 0 & 0 & 5 & 4 & 0 \\

0 & 0 & 1 & 1 & 0 \\

\hline 0 & 0 & 0 & 0 & 2

\end{array}\right] \\

& =\left[\begin{array}{ccc}

A_{11} & 0 & 0 \\

0 & A_{22} & 0 \\

0 & 0 & A_{33}

\end{array}\right]

\end{aligned}

\begin{aligned}

A_{11} &=\left(\begin{array}{cc}

1 & -1 \\

1 & 2

\end{array}\right) \\

& \Rightarrow A_{11}^{-1}=\frac{1}{3}\left(\begin{array}{cc}

2 & 1 \\

-1 & 1

\end{array}\right) \\

& =\left(\begin{array}{cc}

2 / 3 & 1 / 3 \\

-1 / 3 & 1 / 3

\end{array}\right) \\\\

A_{22} &=\left(\begin{array}{ll}

5 & 4 \\

1 & 1

\end{array}\right) \\

& \Rightarrow A_{22}^{-1}=\frac{1}{1}\left(\begin{array}{cc}

1 & -4 \\

-1 & 5

\end{array}\right) \\

& =\left(\begin{array}{cc}

1 & -4 \\

-1 & 5

\end{array}\right) \\\\

A_{33} &=(2) \\

& \Rightarrow A_{33}^{-1}=\left(\frac{1}{2}\right) \\

\text{Therefore,} \\

A^{-1} &=\left[\begin{array}{cc|cc|c}

2 / 3 & 1 / 3 & 0 & 0 & 0 \\

-1 / 3 & 1 / 3 & 0 & 0 & 0 \\

\hline 0 & 0 & 1 & -4 & 0 \\

0 & 0 & -1 & 5 & 0 \\

\hline 0 & 0 & 0 & 0 & 1 / 2

\end{array}\right] \text{.}

\end{aligned}

Gosh, that was way easier than having to use the inverse algorithm for such a monster-size matrix.

Block diagonal matrices sure do come in handy, don’t they?
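A reliable way to check an inverse is to multiply it back against the original matrix. The sketch below inverts each diagonal block of the example exactly, using Python's `fractions` module, then confirms that the product with A is the identity. The helpers `inverse_small` and `block_diag` are written for this illustration and only handle 1-by-1 and 2-by-2 blocks.

```python
from fractions import Fraction


def inverse_small(block):
    """Exact inverse of a 1 x 1 or 2 x 2 block (illustrative helper)."""
    if len(block) == 1:
        if block[0][0] == 0:
            raise ValueError("block is singular")
        return [[Fraction(1, block[0][0])]]
    (a, b), (c, d) = block
    det = a * d - b * c
    if det == 0:
        raise ValueError("block is singular")
    return [[Fraction(d, det), Fraction(-b, det)],
            [Fraction(-c, det), Fraction(a, det)]]


def block_diag(blocks):
    """Place square blocks along the diagonal, zeros elsewhere."""
    n = sum(len(b) for b in blocks)
    out = [[Fraction(0)] * n for _ in range(n)]
    offset = 0
    for b in blocks:
        for i, row in enumerate(b):
            for j, value in enumerate(row):
                out[offset + i][offset + j] = Fraction(value)
        offset += len(b)
    return out


def matmul(X, Y):
    """Ordinary row-by-column matrix product."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)]
            for row in X]


diagonal_blocks = [[[1, -1], [1, 2]], [[5, 4], [1, 1]], [[2]]]
A = block_diag(diagonal_blocks)
A_inv = block_diag([inverse_small(b) for b in diagonal_blocks])

identity = [[1 if i == j else 0 for j in range(5)] for i in range(5)]
print(matmul(A, A_inv) == identity)  # True
```

Only three small inverses are computed here, which is the whole appeal of the block diagonal structure.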

In this video lesson, you will:

- Learn all about partitioned matrices

- Perform operations such as addition and subtraction as well as block multiplication

- Compute inverses and solve equations

Get ready to dive in and explore!
