Did you know that you can factor a matrix?

Jenn, Founder Calcworkshop®, 15+ Years Experience (Licensed & Certified Teacher)

Just as in algebra, where we learned to express a polynomial as a product of two or more factors, we can express a matrix as a product of two matrices.

Defining Matrix Factorization

Matrix factorization, or matrix decomposition, is the process of writing a matrix as a product of simpler matrices. In this lesson, we decompose a matrix into a product of two triangular matrices.

Explaining Triangular Matrices

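Now, a triangular matrix is a special kind of square matrix in which every entry on one side of the main diagonal is zero. An upper triangular matrix has only zeros below the main diagonal, and a lower triangular matrix has only zeros above it.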
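For a 3 × 3 matrix, the two patterns look like this, where the $u_{ij}$ and $\ell_{ij}$ are generic placeholder entries of our own (any of them may also be zero):

$$
U = \begin{bmatrix} u_{11} & u_{12} & u_{13} \\ 0 & u_{22} & u_{23} \\ 0 & 0 & u_{33} \end{bmatrix},
\qquad
L = \begin{bmatrix} \ell_{11} & 0 & 0 \\ \ell_{21} & \ell_{22} & 0 \\ \ell_{31} & \ell_{32} & \ell_{33} \end{bmatrix}
$$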

Understanding LU Decomposition

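And LU decomposition is a factorization of a nonsingular square matrix A (i.e., A is invertible) such that $A = LU$, where L is a lower triangular matrix and U is an upper triangular matrix. This works as long as A can be reduced to U without swapping any rows.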

Applications of Matrix Factorization

But why do we care? What’s the point of factoring a matrix?

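This procedure is motivated by a fairly common industrial and business problem: solving a sequence of linear systems that all share the same coefficient matrix. It also appears in engineering applications, such as solving partial differential equations for various forcing functions, and it is considered a better way to implement Gauss elimination (REF), because once A has been factored, each new right-hand side requires only a forward and a backward substitution rather than a full elimination.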

Step by Step LU Decomposition

Alright, now it's time to see how LU decomposition works. These steps, sometimes called the matrix factorization algorithm, are best illustrated through an example.

Find the LU factorization of the matrix

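First, we will find the upper triangular matrix (U) using row operations. But there's a bit of a twist… we do not care about getting leading 1s, so we never scale a row; we only add multiples of one row to a row beneath it to create zeros below the main diagonal.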
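The elimination step can be sketched in Python. This is a minimal sketch under our own assumptions: the function name `to_upper`, the `(i, j, m)` command format, and the 3 × 3 example matrix are hypothetical illustrations, not the matrix from this lesson. It uses only row replacements of the form R_i <- R_i - m·R_j, records each one as a "command", and stops if it meets a zero pivot, since it never swaps rows.

```python
from fractions import Fraction


def to_upper(A):
    """Reduce A to upper triangular U using only row replacements
    R_i <- R_i - m * R_j (no scaling, no row swaps).

    Returns U and the list of commands as (i, j, m) tuples (0-based rows).
    """
    n = len(A)
    U = [[Fraction(x) for x in row] for row in A]  # exact arithmetic
    commands = []
    for j in range(n):  # pivot column
        if U[j][j] == 0:
            raise ValueError("zero pivot: this sketch does not swap rows")
        for i in range(j + 1, n):  # rows below the pivot
            m = U[i][j] / U[j][j]  # multiplier that zeroes out U[i][j]
            if m != 0:
                U[i] = [a - m * b for a, b in zip(U[i], U[j])]
                commands.append((i, j, m))
    return U, commands


# Hypothetical example matrix, chosen only for illustration
A = [[2, 1, 1],
     [4, 3, 3],
     [8, 7, 9]]
U, commands = to_upper(A)
for i, j, m in commands:
    print(f"R{i + 1} <- R{i + 1} - ({m}) R{j + 1}")
for row in U:
    print(" ".join(str(v) for v in row))
```

For this matrix the three commands are R2 <- R2 - 2R1, R3 <- R3 - 4R1, and R3 <- R3 - 3R2, leaving rows 2 1 1, 0 1 1, and 0 0 2. Note that the diagonal entries 2, 1, 2 stay as they are; no row is scaled to produce a leading 1.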

Next, we will use the row operation commands from finding our U-matrix, together with our always important identity matrix, to find our lower triangular matrix (L).

Our first command was

Our second command was

Our third command was

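After completing each elementary matrix, labeling them $E_1$, $E_2$, and $E_3$ for our first, second, and third commands, we can say $E_3E_2E_1A = U$.

Therefore, $A = (E_3E_2E_1)^{-1}U = E_1^{-1}E_2^{-1}E_3^{-1}U$, so $L = E_1^{-1}E_2^{-1}E_3^{-1}$.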

And we can verify our decomposition by checking that $LU = A$.
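The elementary-matrix route to L, including the final check, can also be sketched in Python. This is a sketch under stated assumptions: the helper names (`identity`, `matmul`, `elementary`, `elementary_inverse`) are hypothetical, and the matrix A = [[2, 1, 1], [4, 3, 3], [8, 7, 9]] with its three commands R2 <- R2 - 2R1, R3 <- R3 - 4R1, R3 <- R3 - 3R2 is an illustrative example of our own. Each command applied to the identity matrix gives an elementary matrix; multiplying them onto A builds U, and multiplying their inverses builds L.

```python
from fractions import Fraction


def identity(n):
    return [[Fraction(int(i == j)) for j in range(n)] for i in range(n)]


def matmul(X, Y):
    """Plain triple-loop matrix product X @ Y."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]


def elementary(n, i, j, m):
    """Elementary matrix for the command R_i <- R_i - m * R_j (0-based rows)."""
    E = identity(n)
    E[i][j] = -Fraction(m)
    return E


def elementary_inverse(n, i, j, m):
    """Inverse of that command: R_i <- R_i + m * R_j."""
    return elementary(n, i, j, -m)


# Hypothetical example: A and the commands found by row reducing it
A = [[2, 1, 1], [4, 3, 3], [8, 7, 9]]
commands = [(1, 0, 2), (2, 0, 4), (2, 1, 3)]  # (i, j, m), in the order applied

# E3 E2 E1 A = U: each new elementary matrix multiplies on the left
U = A
for i, j, m in commands:
    U = matmul(elementary(3, i, j, m), U)

# L = E1^{-1} E2^{-1} E3^{-1}: each inverse multiplies on the right
L = identity(3)
for i, j, m in commands:
    L = matmul(L, elementary_inverse(3, i, j, m))

for row in L:
    print(" ".join(str(v) for v in row))

# Verify the decomposition: L U should reproduce A
print("LU == A:", matmul(L, U) == A)
```

Notice that L ends up as 1 0 0, 2 1 0, 4 3 1: each multiplier m from a command R_i <- R_i - m·R_j sits in row i, column j of L. That shortcut holds when the commands are carried out column by column, as they are here.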

Not bad, right?

I should point out that there are actually two methods for finding LU factorization, and we will compare and contrast their similarities and differences in our video lesson.

LU Factorization in Solving Linear Systems

Okay, so while it’s great to know that we can decompose a matrix into a product of two triangular matrices, LU factorization is most useful when solving systems of linear equations.

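Consider the matrix equation $A\mathbf{x} = \mathbf{b}$, where $A = LU$. Substituting the factorization gives $LU\mathbf{x} = \mathbf{b}$.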

Solving Systems of Linear Equations using LU Factorization

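Consequently, if we let $\mathbf{y} = U\mathbf{x}$ and first solve $L\mathbf{y} = \mathbf{b}$ for $\mathbf{y}$, we can then solve $U\mathbf{x} = \mathbf{y}$ for $\mathbf{x}$. Both systems are triangular, so each one is solved by simple substitution.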
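Here is a minimal Python sketch of this two-stage solve, assuming L and U are already known. The function names and the factorization used (L = [[1, 0, 0], [2, 1, 0], [4, 3, 1]], U = [[2, 1, 1], [0, 1, 1], [0, 0, 2]]) are illustrative choices of our own. Because the factoring is done once, the same L and U are reused for two different right-hand sides b, which is the situation that motivates LU decomposition in the first place.

```python
from fractions import Fraction


def forward_substitution(L, b):
    """Solve L y = b for lower triangular L, working from the top row down."""
    n = len(L)
    y = [Fraction(0)] * n
    for i in range(n):
        s = sum(L[i][j] * y[j] for j in range(i))  # already-known y-values
        y[i] = (Fraction(b[i]) - s) / L[i][i]
    return y


def back_substitution(U, y):
    """Solve U x = y for upper triangular U, working from the bottom row up."""
    n = len(U)
    x = [Fraction(0)] * n
    for i in reversed(range(n)):
        s = sum(U[i][j] * x[j] for j in range(i + 1, n))  # already-known x-values
        x[i] = (Fraction(y[i]) - s) / U[i][i]
    return x


def lu_solve(L, U, b):
    y = forward_substitution(L, b)  # step 1: solve L y = b
    return back_substitution(U, y)  # step 2: solve U x = y


# Hypothetical factorization of A = [[2, 1, 1], [4, 3, 3], [8, 7, 9]]
L = [[1, 0, 0], [2, 1, 0], [4, 3, 1]]
U = [[2, 1, 1], [0, 1, 1], [0, 0, 2]]

# Factor once, then reuse L and U for several right-hand sides
for b in ([4, 10, 24], [7, 19, 49]):
    x = lu_solve(L, U, b)
    print("b =", b, "-> x =", " ".join(str(v) for v in x))
```

For b = (4, 10, 24) the intermediate vector is y = (4, 2, 2) and the solution is x = (1, 1, 1); for b = (7, 19, 49), y = (7, 5, 6) and x = (1, 2, 3). Neither solve repeats the elimination.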

Example of LU Factorization in a Linear System

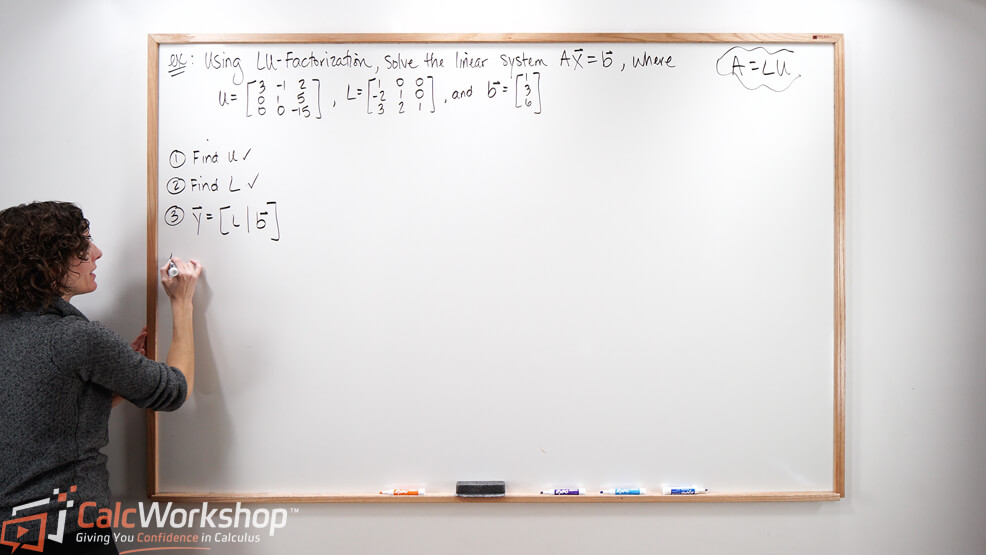

Alright, let’s see this in action to help us make sense of this process.

We'll use LU factorization to solve the following linear system, written in the form $A\mathbf{x} = \mathbf{b}$:

First, we will find our upper triangular matrix (U) and lower triangular matrix (L) using the steps seen above.

Next, we solve the system $L\mathbf{y} = \mathbf{b}$.

By using forward substitution, we can solve for the unknown y-variables.
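Written as a formula, forward substitution works from the top row down, using each y-value as soon as it is found. Here $\ell_{ij}$ and $b_i$ are our own labels for the entries of L and $\mathbf{b}$; when L is built from the identity matrix as above, each $\ell_{ii} = 1$ and the division drops out.

$$
y_1 = \frac{b_1}{\ell_{11}}, \qquad
y_i = \frac{1}{\ell_{ii}}\left(b_i - \sum_{j=1}^{i-1} \ell_{ij}\, y_j\right), \quad i = 2, \dots, n
$$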

Thus,

Now it's time to find the solution to the original system, so we solve $U\mathbf{x} = \mathbf{y}$.

This time, we will use backward substitution to solve for the x-variables.
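Backward substitution mirrors forward substitution, starting from the bottom row and working up. With $u_{ij}$ and $y_i$ as our labels for the entries of U and $\mathbf{y}$, every $u_{ii} \neq 0$ because A is nonsingular, so each division is defined:

$$
x_n = \frac{y_n}{u_{nn}}, \qquad
x_i = \frac{1}{u_{ii}}\left(y_i - \sum_{j=i+1}^{n} u_{ij}\, x_j\right), \quad i = n-1, \dots, 1
$$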

Next Steps

In this video lesson, you will:

- Learn the two methods for LU factorization

- Use matrix decomposition to solve matrix equations

Get ready for a fun learning experience!
