Eigenvalues and eigenvectors appear throughout pure and applied mathematics, for example in the study of differential equations and continuous dynamical systems.

Jenn, Founder Calcworkshop®, 15+ Years Experience (Licensed & Certified Teacher)

In fact, eigenvalues provide essential information in engineering design and machine learning, and they arise naturally in fields such as physics and chemistry.

We can see them at work in the frequencies of electrical systems, in the oscillations of structures such as bridges and buildings, and in the tuning of instruments, whether the goal is a sound that is more pleasant to the ear or the damping of noise and vibrations.

So, what are eigenvalues and eigenvectors anyway?

Before we get to the formal definition, let’s do some exploring.

Understanding Eigenvalues and Eigenvectors with an Example

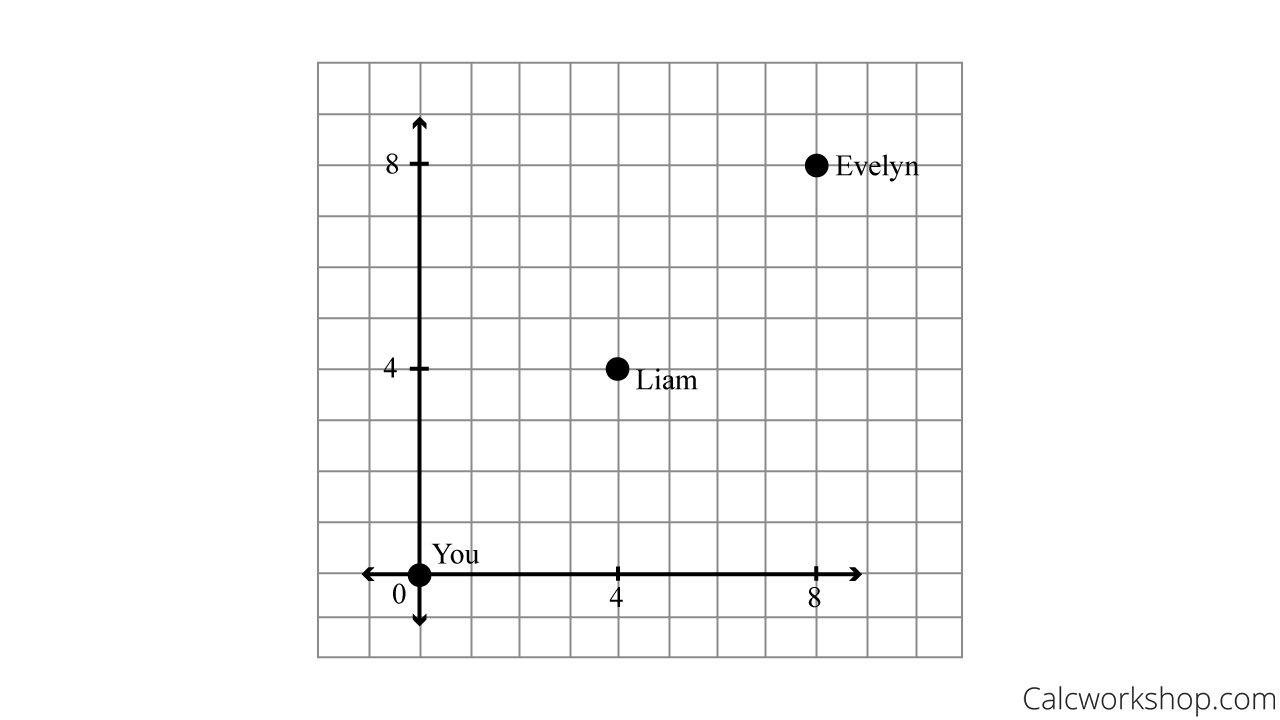

Suppose you live at the origin

Exploring Eigenvectors

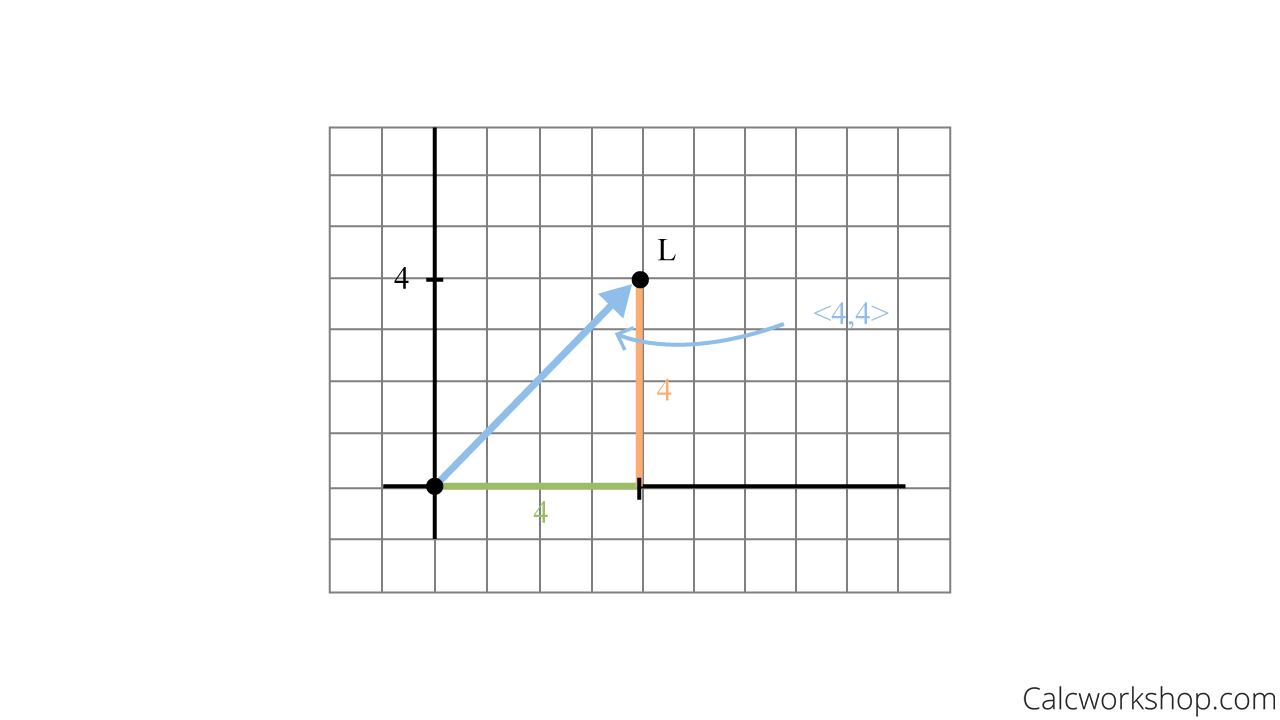

To get to Liam’s house, you must travel 4 units to the right along the

Creating Eigenvectors

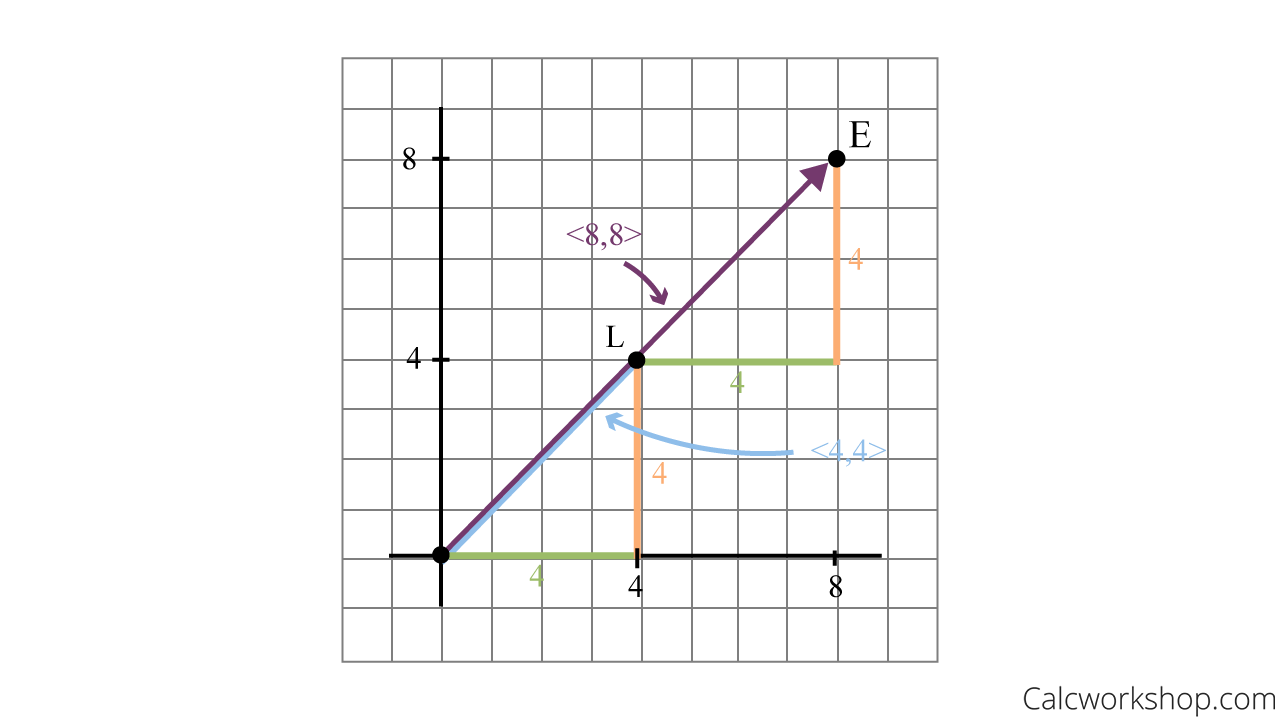

Now, if you and Liam then want to visit Evelyn, you must travel another 4 units to the right along the

But observe how these vectors are related.

The vector from your house to Evelyn’s house is found by scaling the first vector, from your house to Liam’s house, by 2. As

Collinear & Scalar Vectors

Notice that all three houses are collinear (on the same line), and their distances are just scalar multiples of each other. Hence, after a transformation (i.e., walking/translating from one house to another), the direction of the vector remains unchanged. Just the length (magnitude) is different.

The scaling factor is the eigenvalue, and the vector whose direction is unchanged is called the eigenvector.
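The scaling idea can be sketched in a few lines of Python. Only the 4-unit horizontal steps come from the story above; the vertical offsets (3 and 6) and the helper names `displacement` and `are_parallel` are assumptions made purely for illustration.

```python
# Hypothetical coordinates: the text only fixes the 4-unit horizontal steps.
you = (0, 0)
liam = (4, 3)    # assumed: 4 right, 3 up
evelyn = (8, 6)  # assumed: another 4 right, another 3 up

def displacement(start, end):
    """Vector pointing from start to end."""
    return (end[0] - start[0], end[1] - start[1])

def are_parallel(u, v):
    """True when u and v lie on one line through the origin (2D cross product is zero)."""
    return u[0] * v[1] - u[1] * v[0] == 0

to_liam = displacement(you, liam)
to_evelyn = displacement(you, evelyn)

print(are_parallel(to_liam, to_evelyn))  # True: same direction
print(to_evelyn[0] / to_liam[0])         # 2.0: the scaling factor
```

With these assumed coordinates, the trip to Evelyn's house is exactly twice the trip to Liam's house, which is the "same direction, different length" behavior the houses are meant to illustrate.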

Formal Definitions of Eigenvalues and Eigenvectors

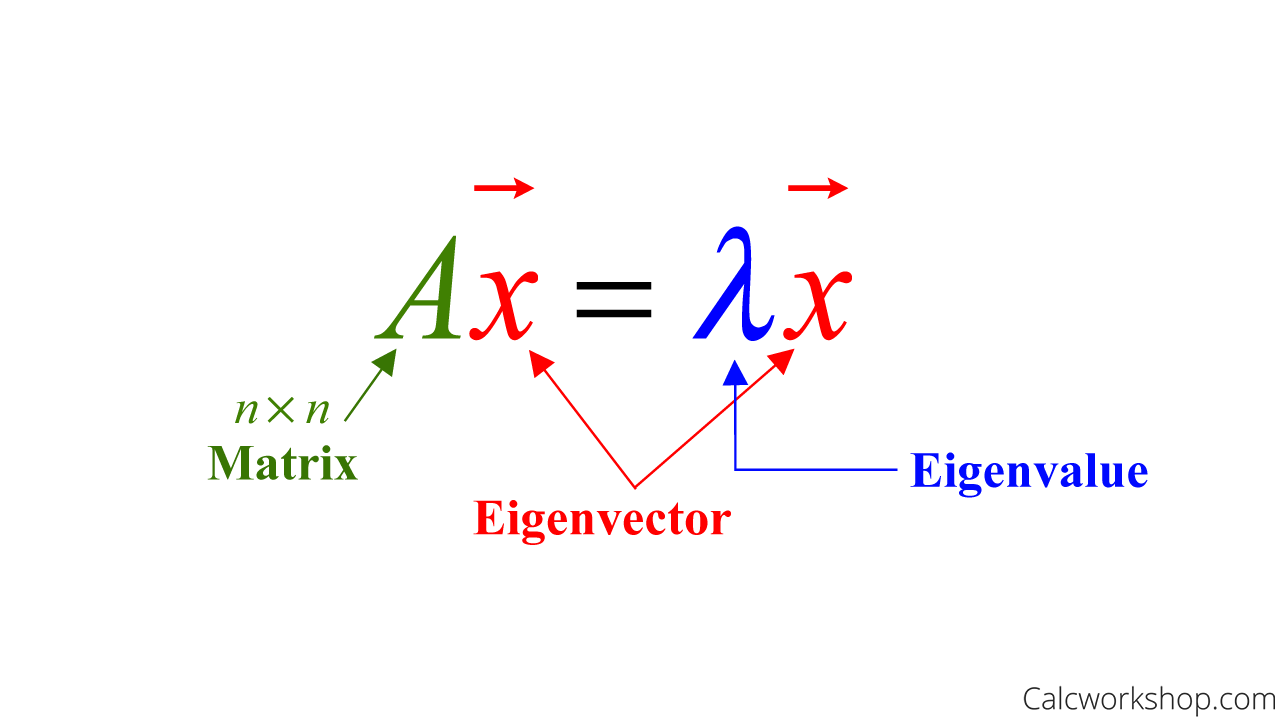

So, an eigenvector of an

Eigenvalue Eigenvector (Formula Definition)
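For reference, the definition is usually written as follows in standard linear algebra texts. The symbols $A$, $\mathbf{x}$, and $\lambda$ are the conventional ones and are assumed here; the notation in the figure above may differ.

$$
A\mathbf{x} = \lambda\mathbf{x}, \qquad \mathbf{x} \neq \mathbf{0}
$$

Here $A$ is an $n \times n$ matrix, the scalar $\lambda$ is an eigenvalue of $A$, and the nonzero vector $\mathbf{x}$ is an eigenvector corresponding to $\lambda$.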

Key Takeaways and Fun Facts

Here are three big takeaways from this important definition.

First, eigenvalues and eigenvectors only apply to square matrices.

Second, an eigenvector is nonzero by definition, so it can never be the zero vector. An eigenvalue, however, is a scalar and is allowed to be zero.

Third, an eigenvector of

And here’s a fun fact: In German, the prefix “eigen” means “self” or “characteristic.” Thus, an eigenvector is a vector that is a multiple of itself by the matrix transformation

Practical Examples: Verifying Eigenvectors and Finding Eigenvalues

Okay, so let’s put all this information into action with a few examples.

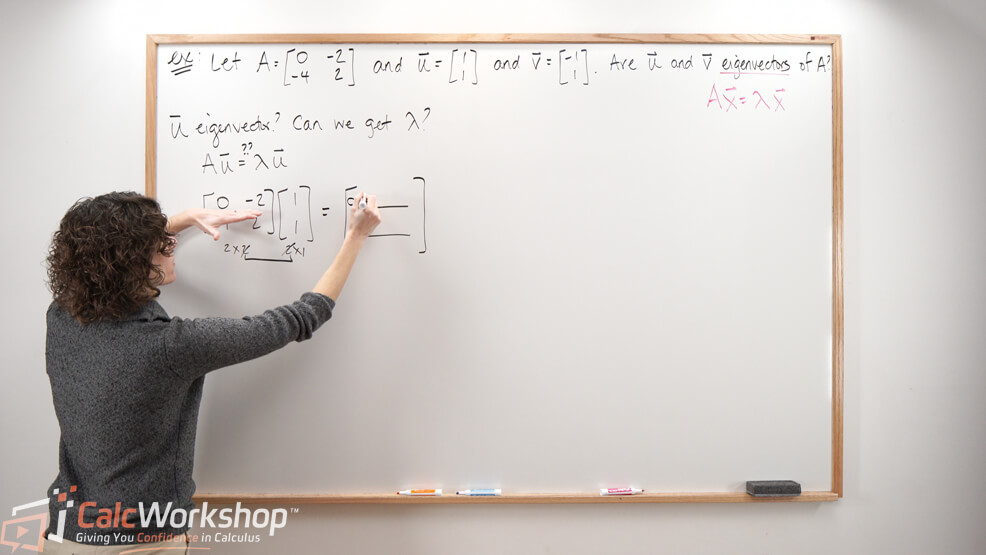

Let

Yes, this means that

Now it’s time to check if

Sadly, Av does not yield a multiple of

Alright, so when we’re given a vector, we can verify whether or not it’s an eigenvector and find its eigenvalue, as the example above nicely shows.
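The verification step can be sketched as a small Python function: multiply, then check whether every entry was scaled by the same factor. The matrix and test vectors below are hypothetical stand-ins chosen for illustration, not necessarily the ones in the figure, and the helper names are assumptions. The sketch assumes integer entries so that `Fraction` keeps the arithmetic exact.

```python
from fractions import Fraction

def mat_vec(A, v):
    """Multiply a square matrix (list of rows) by a vector (list)."""
    return [sum(a * x for a, x in zip(row, v)) for row in A]

def eigenvalue_if_eigenvector(A, v):
    """Return lam with A v = lam v, or None if v is not an eigenvector of A.

    Assumes integer entries in A and v.
    """
    if all(x == 0 for x in v):
        return None  # the zero vector is excluded by definition
    Av = mat_vec(A, v)
    # Read the candidate scaling factor off the first nonzero entry of v.
    i = next(k for k, x in enumerate(v) if x != 0)
    lam = Fraction(Av[i], v[i])
    # v is an eigenvector only if every entry is scaled by that same factor.
    if all(Av_k == lam * v_k for Av_k, v_k in zip(Av, v)):
        return lam
    return None

A = [[1, 6], [5, 2]]  # hypothetical example matrix

print(eigenvalue_if_eigenvector(A, [6, -5]))  # -4
print(eigenvalue_if_eigenvector(A, [3, -2]))  # None
```

For this illustrative matrix, the first vector is scaled by -4 and so is an eigenvector, while the second is sent to a vector that is not a multiple of itself.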

Finding Eigenvectors Given an Eigenvalue

But what if we are given an eigenvalue and are asked to find the corresponding eigenvectors?

For example, let’s show that 7 is an eigenvalue of

First, we will transform our formula
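In the conventional notation (assumed here: $A$ for the matrix, $\mathbf{x}$ for the eigenvector, $\lambda$ for the eigenvalue, $I$ for the identity matrix), this rearrangement is typically written as:

$$
A\mathbf{x} = \lambda\mathbf{x}
\;\Longrightarrow\;
A\mathbf{x} - \lambda I\mathbf{x} = \mathbf{0}
\;\Longrightarrow\;
(A - \lambda I)\,\mathbf{x} = \mathbf{0}
$$

The identity matrix is inserted so that $\lambda$ can be subtracted from a matrix. The scalar $\lambda$ is an eigenvalue exactly when this homogeneous system has a nonzero solution.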

Now let’s make our substitution for

Next, we solve the equation for

So, every vector of the form
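As a rough sketch of the same procedure in Python for the 2×2 case: subtract the eigenvalue from the diagonal, confirm the resulting matrix is singular, and read a solution off a nonzero row. The matrix below is a hypothetical example chosen because 7 is one of its eigenvalues, and the function name `eigenvector_for` is an assumption for illustration.

```python
def eigenvector_for(A, lam):
    """Return one nonzero solution of (A - lam*I) x = 0 for a 2x2 matrix A,
    or None if lam is not an eigenvalue of A."""
    a, b = A[0][0] - lam, A[0][1]
    c, d = A[1][0], A[1][1] - lam
    if a * d - b * c != 0:
        return None  # A - lam*I is invertible, so only x = 0 solves the system
    # A row (p, q) demands p*x1 + q*x2 = 0, which x = (q, -p) satisfies.
    for p, q in ((a, b), (c, d)):
        if (p, q) != (0, 0):
            return (q, -p)
    return (1, 0)  # A - lam*I is the zero matrix: every nonzero vector works

A = [[1, 6], [5, 2]]  # hypothetical example matrix

print(eigenvector_for(A, 7))  # (6, 6)
print(eigenvector_for(A, 3))  # None: 3 is not an eigenvalue of this matrix
```

Any nonzero multiple of the returned vector, such as (1, 1), solves the same system, so the function returns just one representative of the whole family of eigenvectors for that eigenvalue.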

Introduction to Eigenspaces

In fact, what we have just found is a basis for the corresponding eigenspace.

We’ve just observed that finding the eigenvectors for a given eigenvalue involves solving a homogeneous system of equations. And the subspace

Cool, right?

Next Steps

In this lesson, you will:

- Examine definitions, theorems, and properties

- Learn how to find corresponding eigenvalues given an eigenvector

- Discover how to find an eigenvector given its corresponding eigenvalue

- Find a basis for the corresponding eigenspace

Jump right in and start exploring!
