What if we don’t want to map from \(\mathbb{R}^{n}\) to \(\mathbb{R}^{m}\), but instead want to find a representation for any linear transformation between two finite-dimensional vector spaces? To do this, we must combine two powerful tools: eigenvectors and linear transformations.

Jenn, Founder Calcworkshop®, 15+ Years Experience (Licensed & Certified Teacher)

As you may recall from our previous study of transformations, if

\begin{aligned}

T: \mathbb{R}^{n} & \rightarrow \mathbb{R}^{m}

\end{aligned}

is a linear transformation, then there is an \(m \times n\) matrix \(\mathrm{A}\) such that

\begin{aligned}

T(\vec{x}) & = A \vec{x}

\end{aligned}

for all \(\vec{x}\) in \(\mathbb{R}^{n}\), where

\begin{aligned}

A & =\left[\begin{array}{llll}T\left(\overrightarrow{e_{1}}\right) & T\left(\overrightarrow{e_{2}}\right) & \cdots & T\left(\overrightarrow{e_{n}}\right)\end{array}\right]

\end{aligned}
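Here is a minimal Python sketch of that recipe, assuming a hypothetical map \(T(x_1, x_2) = (2x_1 + 7x_2,\ x_1 - 4x_2)\) on \(\mathbb{R}^{2}\); the helper names `standard_matrix` and `mat_vec` are illustrations too. It applies `T` to each standard basis vector, uses the images as the columns of `A`, and then spot-checks that multiplying by `A` agrees with applying `T`.

```python
def T(x):
    """Hypothetical linear map R^2 -> R^2: T(x1, x2) = (2*x1 + 7*x2, x1 - 4*x2)."""
    x1, x2 = x
    return [2 * x1 + 7 * x2, x1 - 4 * x2]


def standard_matrix(T, n):
    """Build the m x n matrix A whose j-th column is T(e_j)."""
    columns = []
    for j in range(n):
        e_j = [1 if i == j else 0 for i in range(n)]  # j-th standard basis vector
        columns.append(T(e_j))
    m = len(columns[0])
    # Transpose the list of columns into a list of rows.
    return [[columns[j][i] for j in range(n)] for i in range(m)]


def mat_vec(A, x):
    """Multiply a matrix (stored as a list of rows) by a vector."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]


A = standard_matrix(T, 2)
print(A)                                  # [[2, 7], [1, -4]]
print(mat_vec(A, [3, -1]) == T([3, -1]))  # True
```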

From Standard Basis to Coordinate Vectors

As we have seen, the standard basis is the most convenient choice when performing a matrix transformation. But it falls short when we want to map between general vector spaces, which need not come with a standard basis.

So, rather than using the standard basis, such that

\begin{aligned}

\vec{x} & \mapsto A \vec{x}

\end{aligned}

we will use the mapping

\begin{aligned}

\vec{u} & \mapsto D \vec{u}

\end{aligned}

which is essentially the same mapping, just viewed from a different perspective.

How?

By representing vectors by their coordinate vectors for a basis!

Okay, let

\begin{aligned}

\mathrm{V} & \text{ be an } \mathrm{n}\text{-dimensional vector space,} \\

\mathrm{W} & \text{ be an } \mathrm{m}\text{-dimensional vector space, and } \\

\mathrm{T} & \text{ be a linear transformation from } \mathrm{V} \text{ to } \mathrm{W}

\end{aligned}

\begin{aligned}

T: V & \rightarrow W

\end{aligned}

Now, let

\begin{aligned}

B & =\left\{\vec{b}_{1}, \ldots, \vec{b}_{n}\right\}

\end{aligned}

be an ordered basis for \(\mathrm{V}\) and let

\begin{aligned}

C & =\left\{\vec{c}_{1}, \ldots, \vec{c}_{m}\right\}

\end{aligned}

be an ordered basis for \(\mathrm{W}\). Then we can express each vector \(\vec{x}\) in \(\mathrm{V}\) as a linear combination

\begin{aligned}

\vec{x} & =r_{1} \vec{b}_{1}+\cdots+r_{n} \vec{b}_{n}

\end{aligned}

for unique scalar weights \(r_{1}, \ldots, r_{n}\).

Thus, we can represent the vector \(\vec{x}\) in \(\mathrm{V}\) by its coordinate vector in \(\mathbb{R}^{n}\) as

\begin{aligned}

[\vec{x}]_{B} & =\left[\begin{array}{c}

r_{1} \\

\vdots \\

r_{n}

\end{array}\right]

\end{aligned}
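Finding \([\vec{x}]_{B}\) means solving the linear system \(r_{1}\vec{b}_{1}+\cdots+r_{n}\vec{b}_{n}=\vec{x}\) for the weights. As a small sketch for \(n = 2\), the hypothetical helper `coords_2d` below solves that system with Cramer's rule in exact fractions; the basis vectors \((3, 2)\), \((2, 1)\) and the vector \((7, 4)\) are sample values chosen only for illustration.

```python
from fractions import Fraction


def coords_2d(b1, b2, x):
    """Return [x]_B = [r1, r2] with x = r1*b1 + r2*b2, for a basis B = {b1, b2} of R^2.

    Solves the 2x2 system [b1 b2] r = x by Cramer's rule, using exact fractions.
    """
    det = b1[0] * b2[1] - b2[0] * b1[1]
    if det == 0:
        raise ValueError("b1 and b2 are not linearly independent")
    r1 = Fraction(x[0] * b2[1] - b2[0] * x[1], det)
    r2 = Fraction(b1[0] * x[1] - x[0] * b1[1], det)
    return [r1, r2]


b1, b2 = [3, 2], [2, 1]
r = coords_2d(b1, b2, [7, 4])
print(r)  # [Fraction(1, 1), Fraction(2, 1)], since 1*(3, 2) + 2*(2, 1) = (7, 4)
```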

Likewise, we can represent each vector \(\vec{y}\) in \(\mathrm{W}\) by its coordinate vector \([\vec{y}]_{C}\) in \(\mathbb{R}^{m}\).

Matrix Representation of a Linear Transformation

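Therefore, we want to find an \(m \times n\) matrix \(\mathrm{M}\) that carries the \(B\)-coordinates of each input to the \(C\)-coordinates of its image, that is,

\begin{aligned}

[T(\vec{x})]_{C} & =M[\vec{x}]_{B}

\end{aligned}

for every \(\vec{x}\) in \(\mathrm{V}\). Such a matrix exists, and its columns are the \(C\)-coordinate vectors of the images of the basis vectors in \(B\):

\begin{aligned}

M & =\left[\begin{array}{llll}\left[T\left(\vec{b}_{1}\right)\right]_{C} & \left[T\left(\vec{b}_{2}\right)\right]_{C} & \cdots & \left[T\left(\vec{b}_{n}\right)\right]_{C}\end{array}\right]

\end{aligned}

This works because \(T\) and the coordinate mapping \(\vec{y} \mapsto [\vec{y}]_{C}\) are both linear: if \(\vec{x}=r_{1} \vec{b}_{1}+\cdots+r_{n} \vec{b}_{n}\), then \([T(\vec{x})]_{C}=r_{1}\left[T\left(\vec{b}_{1}\right)\right]_{C}+\cdots+r_{n}\left[T\left(\vec{b}_{n}\right)\right]_{C}=M[\vec{x}]_{B}\).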

The matrix \(\mathrm{M}\) is a matrix representation of \(\mathrm{T}\) and is called the matrix for \(\mathrm{T}\) relative to the bases \(\mathrm{B}\) and \(\mathrm{C}\).
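To see that \(\mathrm{V}\) and \(\mathrm{W}\) need not be \(\mathbb{R}^{n}\), here is a sketch with \(\mathrm{V} = \mathbb{P}_{1}\) (polynomials of degree at most 1) and \(\mathrm{W} = \mathbb{P}_{2}\), where the hypothetical transformation `T` multiplies a polynomial by \(t\). Polynomials are stored as coefficient lists `[a0, a1, ...]`, and the bases are assumed to be the monomial bases \(B = \{1, t\}\) and \(C = \{1, t, t^{2}\}\), so a coordinate vector is just the zero-padded coefficient list. The sketch builds \(M\) column by column as \([T(\vec{b}_{j})]_{C}\) and then checks \([T(\vec{p})]_{C} = M[\vec{p}]_{B}\) for one sample polynomial.

```python
def T(p):
    """Hypothetical linear map P_1 -> P_2: (T p)(t) = t * p(t).

    A polynomial a0 + a1 t + ... is stored as the coefficient list [a0, a1, ...];
    multiplying by t shifts every coefficient up one degree.
    """
    return [0] + list(p)


def coords_monomial(p, dim):
    """Coordinate vector of p relative to the monomial basis {1, t, ..., t^(dim-1)}."""
    return list(p) + [0] * (dim - len(p))


B = [[1], [0, 1]]  # basis {1, t} for P_1
m = 3              # dim P_2, with basis C = {1, t, t^2}

# Column j of M is [T(b_j)]_C; transpose the columns into rows.
columns = [coords_monomial(T(b), m) for b in B]
M = [[col[i] for col in columns] for i in range(m)]
print(M)  # [[0, 0], [1, 0], [0, 1]]  (a 3 x 2 matrix, since m = 3 and n = 2)

# Check [T(p)]_C == M [p]_B for p(t) = 3 + 5t.
p = [3, 5]
p_B = coords_monomial(p, len(B))
M_p = [sum(M[i][j] * p_B[j] for j in range(len(B))) for i in range(m)]
print(coords_monomial(T(p), m), M_p)  # [0, 3, 5] [0, 3, 5]
```

Because the bases here are monomial bases, the coordinate step is trivial; with a different basis for \(C\), `coords_monomial` would have to be replaced by a genuine linear solve.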

Alright, this is great and all, but how does this really work? I’m confused.

Here’s how it works.

The Power of Diagonalization

In a problem involving \(\mathbb{R}^{n}\), a linear transformation \(T\) typically appears first as a matrix transformation \(\vec{x} \mapsto A\vec{x}\). But if \(\mathrm{A}\) is diagonalizable, then there is a basis \(\mathrm{B}\) for \(\mathbb{R}^{n}\) consisting of eigenvectors of \(\mathrm{A}\), and in this case the B-matrix for \(\mathrm{T}\) (the matrix for \(\mathrm{T}\) relative to \(\mathrm{B}\), used for both the domain and the codomain) is diagonal. Therefore, by diagonalizing \(\mathrm{A}\), we are in essence finding a diagonal matrix representation of \(\vec{x} \mapsto A\vec{x}\).

So, as we learned in our Diagonalization video, if \(A=P D P^{-1}\), where \(D\) is a diagonal \(n \times n\) matrix, and if \(B\) is the basis for \(\mathbb{R}^{n}\) formed from the columns of \(P\) (the eigenvectors of \(\mathrm{A}\)), then \(\mathrm{D}\) is the B-matrix for the transformation.

Example: Finding the B-Matrix with Eigenvectors

For example, suppose \(T: \mathbb{R}^{2} \rightarrow \mathbb{R}^{2}\) is defined by

\begin{aligned}

T(\vec{x}) & =A \vec{x}

\end{aligned}

where

\begin{aligned}

A & =\left[\begin{array}{cc}2 & 7 \\ 1 & -4\end{array}\right]

\end{aligned}

Let’s find a basis B for \(\mathbb{R}^{2}\) with the property that the \(\mathrm{B}\)-matrix for \(\mathrm{T}\) is a diagonal matrix.

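First, we want to write

\begin{aligned}

A & =P D P^{-1}

\end{aligned}

so we need to find our D matrix, whose diagonal entries are the eigenvalues of \(A\). We find them by solving the characteristic equation.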

\begin{aligned}

\operatorname{det}\left[\begin{array}{cc}

2-\lambda & 7 \\

1 & -4-\lambda

\end{array}\right] & = 0 \\

(2-\lambda)(-4-\lambda)-7 & = 0 \\

\lambda^{2} + 2 \lambda - 15 & = 0 \\

(\lambda-3)(\lambda+5) & = 0 \\

\lambda & = 3, -5 \\

D & = \left[\begin{array}{cc}

3 & 0 \\

0 & -5

\end{array}\right]

\end{aligned}
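For a \(2 \times 2\) matrix the characteristic equation is \(\lambda^{2} - \operatorname{tr}(A)\lambda + \det(A) = 0\), so the computation above can be double-checked in a few lines. This is a sketch under a stated assumption: the hypothetical helper `eigenvalues_2x2` only handles the case where the discriminant is a perfect square (integer eigenvalues, as here) and raises an error otherwise.

```python
import math


def eigenvalues_2x2(A):
    """Eigenvalues of a 2x2 matrix as roots of lambda^2 - tr(A)*lambda + det(A) = 0.

    Assumes the discriminant is a perfect square, so both roots are integers
    (true for this example); raises ValueError otherwise.
    """
    (a, b), (c, d) = A
    trace = a + d
    det = a * d - b * c
    disc = trace * trace - 4 * det
    if disc < 0 or math.isqrt(disc) ** 2 != disc:
        raise ValueError("eigenvalues are not integers")
    root = math.isqrt(disc)
    # trace and root have the same parity, so the division by 2 is exact.
    return ((trace + root) // 2, (trace - root) // 2)


A = [[2, 7], [1, -4]]
(a, b), (c, d) = A
print([1, -(a + d), a * d - b * c])  # characteristic polynomial coefficients: [1, 2, -15]
print(eigenvalues_2x2(A))            # (3, -5)
```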

Now we will find our \(P\) matrix, whose columns are eigenvectors of \(A\).

\begin{aligned}

\lambda & = 3 \\\\

\left[\begin{array}{ccc}

2-3 & 7 & 0 \\

1 & -4-3 & 0

\end{array}\right] & = \left[\begin{array}{ccc}

-1 & 7 & 0 \\

1 & -7 & 0

\end{array}\right] \\

\sim\left[\begin{array}{ccc}

1 & -7 & 0 \\

0 & 0 & 0

\end{array}\right] & \Rightarrow \vec{x} = x_{2}\left[\begin{array}{c}

7 \\

1

\end{array}\right] \\\\

\lambda & = -5 \\\\

\left[\begin{array}{ccc}

2-(-5) & 7 & 0 \\

1 & -4-(-5) & 0

\end{array}\right] & = \left[\begin{array}{lll}

7 & 7 & 0 \\

1 & 1 & 0

\end{array}\right] \\

\sim\left[\begin{array}{lll}

1 & 1 & 0 \\

0 & 0 & 0

\end{array}\right] & \Rightarrow \vec{x} = x_{2}\left[\begin{array}{c}

-1 \\

1

\end{array}\right] \\\\

P & = \left[\begin{array}{cc}

7 & -1 \\

1 & 1

\end{array}\right]

\end{aligned}
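Before relying on \(P\), a quick sanity check is to confirm \(A\vec{v} = \lambda\vec{v}\) for each eigenpair found by the row reductions above. The sketch below does exactly that; `mat_vec` and `pairs` are illustrative names, not part of the lesson.

```python
def mat_vec(A, x):
    """Multiply a matrix (stored as a list of rows) by a vector."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]


A = [[2, 7], [1, -4]]
pairs = [(3, [7, 1]), (-5, [-1, 1])]  # (eigenvalue, eigenvector) from the row reductions

for lam, v in pairs:
    Av = mat_vec(A, v)
    print(lam, Av, Av == [lam * vi for vi in v])
# 3 [21, 3] True
# -5 [5, -5] True
```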

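Now, all that’s left is to recognize that the D matrix is the B-matrix in disguise!

It’s true! Because the columns of \(P\) are eigenvectors of \(\mathrm{A}\), the basis \(B=\left\{\left[\begin{array}{c}7 \\ 1\end{array}\right],\left[\begin{array}{c}-1 \\ 1\end{array}\right]\right\}\) is the one we were looking for, and the D matrix is the B-matrix for \(\mathrm{T}\).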

Awesome, right?
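The claim can also be checked numerically: if \(D\) really is the B-matrix, then \(P^{-1}AP\) should equal \(D\). This sketch computes that product in exact fractions; the helpers `inverse_2x2` and `mat_mul` are hypothetical names written just for this check.

```python
from fractions import Fraction


def inverse_2x2(P):
    """Inverse of a 2x2 matrix via (1/det) * [[d, -b], [-c, a]], with exact fractions."""
    (a, b), (c, d) = P
    det = a * d - b * c
    if det == 0:
        raise ValueError("matrix is singular")
    return [[Fraction(d, det), Fraction(-b, det)],
            [Fraction(-c, det), Fraction(a, det)]]


def mat_mul(X, Y):
    """Product of two matrices stored as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))]
            for i in range(len(X))]


A = [[2, 7], [1, -4]]
P = [[7, -1], [1, 1]]  # columns are the eigenvectors (7, 1) and (-1, 1)

B_matrix = mat_mul(mat_mul(inverse_2x2(P), A), P)
print(B_matrix == [[3, 0], [0, -5]])  # True
```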

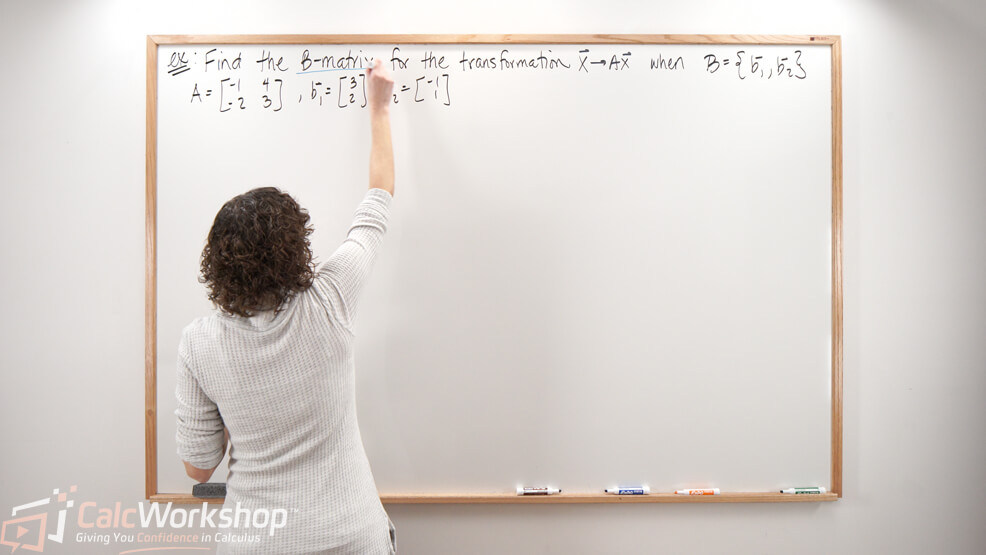

Example: Finding the B-Matrix with Given Matrices

Additionally, we can use the A and P matrices to find the B-matrix for any basis B, even one that is not made up of eigenvectors.

Assume

\begin{aligned}

A & =\left[\begin{array}{cc}4 & -9 \\ 4 & -8\end{array}\right]

\end{aligned}

and

\begin{aligned}

\vec{b}_{1} & =\left[\begin{array}{l}3 \\ 2\end{array}\right]

\end{aligned}

and

\begin{aligned}

\vec{b}_{2} & =\left[\begin{array}{l}2 \\ 1\end{array}\right]

\end{aligned}

Let’s find the B-matrix for \(T(\vec{x})=A\vec{x}\) relative to the basis \(B=\left\{\vec{b}_{1}, \vec{b}_{2}\right\}\).

Well, we’re given A, and the basis vectors form the columns of \(\mathrm{P}\), so what we need to find is the B-matrix for the linear transformation.

\begin{aligned}

A & =\left[\begin{array}{cc}

4 & -9 \\

4 & -8

\end{array}\right]

\end{aligned}

and

\begin{aligned}

P & =\left[\begin{array}{ll}

\vec{b}_{1} & \vec{b}_{2}

\end{array}\right]=\left[\begin{array}{ll}

3 & 2 \\

2 & 1

\end{array}\right]

\end{aligned}

The B-matrix for \(\vec{x} \mapsto A\vec{x}\) is \(P^{-1} A P\). This is the same formula we get by solving

\begin{aligned}

A & =P D P^{-1}

\end{aligned}

for \(\mathrm{D}\):

\begin{aligned}

D & =P^{-1} A P

\end{aligned}

but this time the result need not be diagonal unless the columns of \(P\) are eigenvectors of \(A\). Therefore

\begin{aligned}

\text{B-matrix} & =P^{-1} A P \\

& =\underbrace{\frac{1}{-1}\left[\begin{array}{cc}

1 & -2 \\

-2 & 3

\end{array}\right]}_{P^{-1}} \underbrace{\left[\begin{array}{cc}

4 & -9 \\

4 & -8

\end{array}\right]}_{A} \underbrace{\left[\begin{array}{ll}

3 & 2 \\

2 & 1

\end{array}\right]}_{P} \\

& =\left[\begin{array}{cc}

-2 & 1 \\

0 & -2

\end{array}\right]

\end{aligned}

See, not so bad!
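As a cross-check, a short Python sketch can rebuild the same B-matrix column by column from the definition \(\left[\,[A\vec{b}_{1}]_{B} \;\; [A\vec{b}_{2}]_{B}\,\right]\) instead of from \(P^{-1}AP\). The sketch assumes the lower-right entry of \(A\) is \(-8\), the value for which \(P^{-1}AP\) equals the result shown above; the helpers `coords_2d` and `mat_vec` are hypothetical names.

```python
from fractions import Fraction


def coords_2d(b1, b2, x):
    """[x]_B for the basis B = {b1, b2} of R^2, via Cramer's rule (exact fractions)."""
    det = b1[0] * b2[1] - b2[0] * b1[1]
    return [Fraction(x[0] * b2[1] - b2[0] * x[1], det),
            Fraction(b1[0] * x[1] - x[0] * b1[1], det)]


def mat_vec(A, x):
    """Multiply a matrix (stored as a list of rows) by a vector."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]


A = [[4, -9], [4, -8]]  # assumes the (2, 2) entry of A is -8
b1, b2 = [3, 2], [2, 1]

# Column j of the B-matrix is [A b_j]_B; transpose the columns into rows.
cols = [coords_2d(b1, b2, mat_vec(A, b)) for b in (b1, b2)]
B_matrix = [[cols[j][i] for j in range(2)] for i in range(2)]
print(B_matrix == [[-2, 1], [0, -2]])  # True
```

The two routes must agree: column \(j\) of \(P^{-1}AP\) is \(P^{-1}(A\vec{b}_{j})\), and multiplying by \(P^{-1}\) is exactly how one gets \(B\)-coordinates.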

Next Steps

In this lesson, you will:

- Understand how diagonalization applies to linear transformations using coordinate vectors

- Learn how the matrix of a linear transformation depends on the ordered bases \(\mathrm{B}\) and \(\mathrm{C}\)

- Discover how the matrix for \(\mathrm{T}\) relative to \(\mathrm{B}\) (the B-matrix) is used for transforming polynomials

- Make connections between the diagonal matrix representation and similar matrices, along with eigenvalues (D-matrix or B-matrix) and eigenvectors (P matrix or basis)

Dive in and start exploring!
